Database, reduce, and analyze GBM data without having to know anything. Curiosity killed the catalog.

- Creates a MongoDB database of GRBs observed by GBM.

- Heuristic algorithms are applied to search for the background regions in the time series of GBM light curves.

- Analysis notebooks can be generated on the fly for both time-integrated and time-resolved spectral fitting.
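To give a feel for what a heuristic background search can look like, here is a minimal sketch. It is not the algorithm this package uses: it simply assumes a binned light curve, estimates a robust baseline from the median and the median absolute deviation, and keeps contiguous runs of bins that stay close to that baseline. The function name, `n_sigma`, and `min_bins` are all made up for illustration.

```python
from statistics import median


def find_background_intervals(counts, n_sigma=3.0, min_bins=3):
    """Return (start, stop) bin-index pairs (stop exclusive) of quiet stretches.

    Hypothetical helper: a bin counts as "quiet" when it lies within
    n_sigma robust standard deviations of the median count level.
    """
    level = median(counts)
    # Median absolute deviation, scaled so it approximates a Gaussian sigma.
    mad = median(abs(c - level) for c in counts)
    sigma = 1.4826 * mad if mad > 0 else 1.0
    quiet = [abs(c - level) <= n_sigma * sigma for c in counts]

    intervals, start = [], None
    # The trailing False is a sentinel that closes a run ending at the last bin.
    for i, is_quiet in enumerate(quiet + [False]):
        if is_quiet and start is None:
            start = i
        elif not is_quiet and start is not None:
            if i - start >= min_bins:
                intervals.append((start, i))
            start = None
    return intervals


# Toy light curve: flat background with a burst in bins 5 to 7.
toy_counts = [10, 11, 9, 10, 12, 80, 95, 60, 11, 10, 9, 10]
background = find_background_intervals(toy_counts)
```

On the toy light curve, the bins before and after the burst come back as two separate background intervals, which is the kind of selection you would then fit a background model to.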

Of course, this analysis is highly opinionated.

Animal cruelty.

Assuming you have built a local database ('tis possible, see below), just type:

$> get_grb_analysis --grb GRBYYMMDDxxx

Magic happens, and then you can look at your locally built GRB analysis notebook.

If you want to do more, go ahead and fit the spectra:

$> get_grb_analysis --grb GRBYYMMDDxxx --run-fit

And your automatic (but mutable) analysis is ready:

The concept behind this is to query the Fermi GBM database for basic trigger info, use this in combination with tools such as gbmgeometry to figure out which detectors produce the best data for each GRB, and then figure out preliminary selections / parameters / setups for subsequent analysis.
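The detector-selection idea can be sketched without any GBM tooling: rank detectors by the angle between their pointing direction and the GRB, and keep the closest few. The snippet below is only an illustration under that assumption. It does not call gbmgeometry, the detector names and angles are placeholders rather than the real GBM mounting angles, and `best_detectors` is a hypothetical helper.

```python
import math


def unit_vector(az_deg, zen_deg):
    """Convert azimuth/zenith angles (degrees) to a Cartesian unit vector."""
    az, zen = math.radians(az_deg), math.radians(zen_deg)
    return (
        math.sin(zen) * math.cos(az),
        math.sin(zen) * math.sin(az),
        math.cos(zen),
    )


def separation_deg(a, b):
    """Angle in degrees between two unit vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    # Clamp to guard against rounding pushing the dot product outside [-1, 1].
    return math.degrees(math.acos(max(-1.0, min(1.0, dot))))


def best_detectors(detectors, grb_az, grb_zen, n_best=3):
    """Return the names of the n_best detectors pointing closest to the GRB."""
    grb = unit_vector(grb_az, grb_zen)
    ranked = sorted(
        detectors,
        key=lambda name: separation_deg(unit_vector(*detectors[name]), grb),
    )
    return ranked[:n_best]


# Placeholder (azimuth, zenith) pointings in degrees; NOT real GBM values.
DETECTORS = {
    "det_a": (0.0, 20.0),
    "det_b": (90.0, 45.0),
    "det_c": (180.0, 90.0),
    "det_d": (270.0, 45.0),
}

closest = best_detectors(DETECTORS, grb_az=0.0, grb_zen=30.0, n_best=1)
```

A real selection would also need to account for things this sketch ignores, such as Earth occultation and blockage by the spacecraft.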

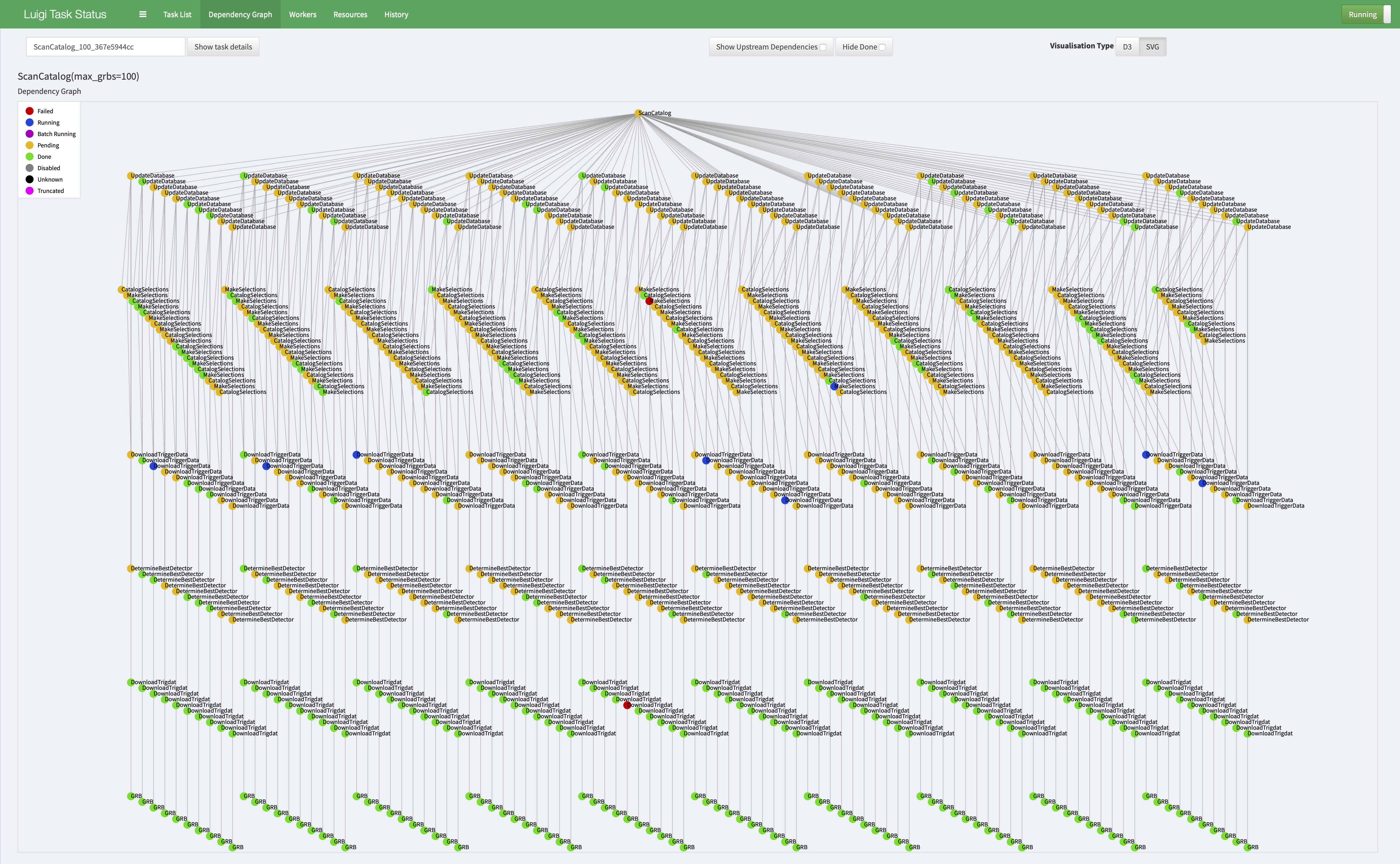

$> build_catalog --n_grbs 100 --port 8989

This process starts by launching luigi, which manages the pipeline:

All of the metadata about the process is stored in a MongoDB database, which can be referenced later when building analyses.
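If you want to poke at that metadata yourself, something along these lines should work with pymongo. This is a sketch under assumptions: the database name, collection name, and document fields below are invented for illustration and are not this package's actual schema. The two helpers only rely on pymongo's standard `Collection.update_one` and `Collection.find_one` methods.

```python
def store_grb_metadata(collection, grb_name, metadata):
    """Insert or update one GRB document, keyed on a hypothetical "grb" field."""
    collection.update_one({"grb": grb_name}, {"$set": metadata}, upsert=True)


def load_grb_metadata(collection, grb_name):
    """Return the stored document for a GRB, or None if it is not there."""
    return collection.find_one({"grb": grb_name})


# Usage against a running MongoDB server (names are assumptions, not the
# package's real layout):
#
#   from pymongo import MongoClient
#   collection = MongoClient("localhost", 27017)["gbm_catalog"]["grbs"]
#   store_grb_metadata(collection, "GRBYYMMDDxxx", {"detectors": ["det_a"]})
#   print(load_grb_metadata(collection, "GRBYYMMDDxxx"))
```

Using an upsert keeps the store step idempotent, so re-running it for the same GRB updates the existing document instead of creating duplicates.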