By Nikolaos Gkanatsios*, Ayush Jain*, Zhou Xian, Yunchu Zhang, Christopher G. Atkeson, Katerina Fragkiadaki.

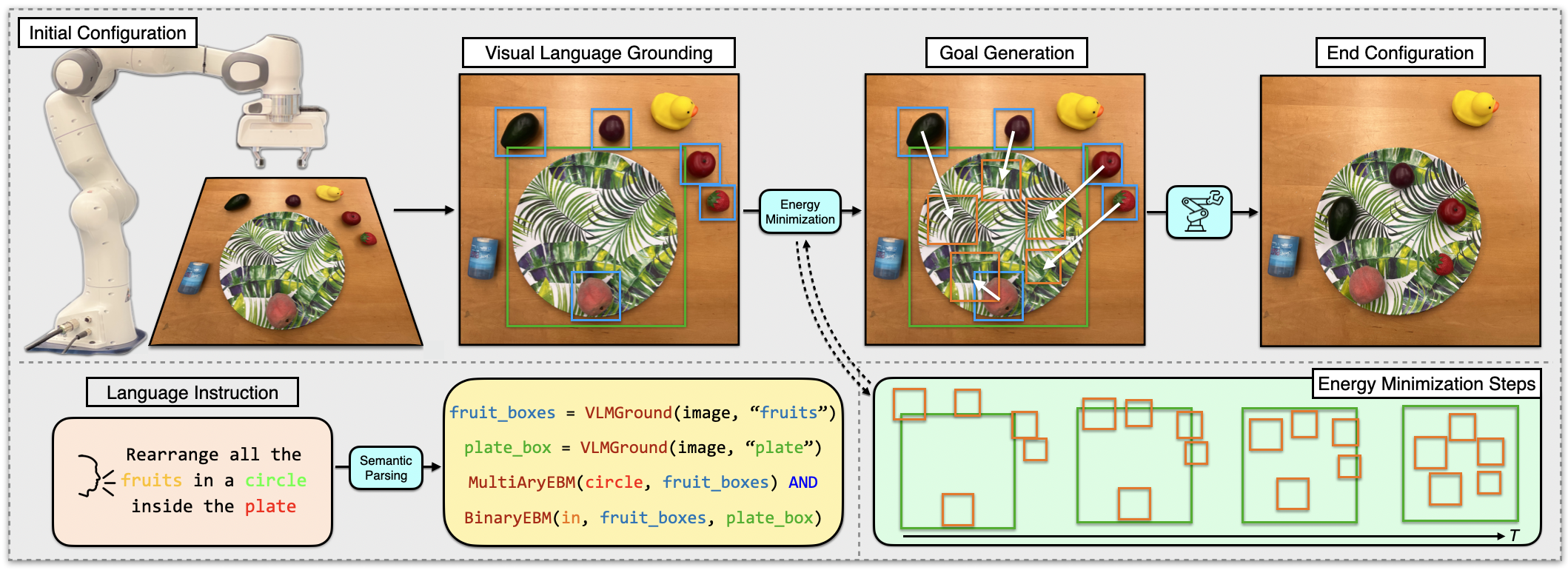

Official implementation of "Energy-based Models are Zero-Shot Planners for Compositional Scene Rearrangement", accepted at RSS 2023.

The installation steps below assume CUDA 11.1 and torch==1.10.2, the versions we used for our experiments.

- conda create -n "srem" python=3.8

- conda activate srem

- pip install -r requirements.txt

- pip install -U torch==1.10.2 torchvision==0.11.3 --extra-index-url https://download.pytorch.org/whl/cu111

- sh scripts/init.sh (Make sure you have gcc>=5.5.0)
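The steps above pin a specific torch/CUDA build and require gcc>=5.5.0. Before running the scripts, it can help to confirm that the environment matches. The following is a minimal sketch, not part of the SREM repo. `parse_version`, `at_least`, `gcc_version` and `torch_build` are hypothetical names, and the only library calls it relies on are the standard library, `torch.__version__` and `torch.version.cuda`.

```python
# Hypothetical helper, not part of the SREM repo: checks the versions this README
# pins (torch 1.10.2 built for CUDA 11.1, gcc >= 5.5.0).
import re
import shutil
import subprocess
from typing import Optional, Tuple


def parse_version(text: str) -> Tuple[int, ...]:
    """'1.10.2+cu111' -> (1, 10, 2); stops at the first non-numeric component."""
    core = text.strip().split("+")[0]  # drop local build tags such as '+cu111'
    parts = []
    for piece in core.split("."):
        match = re.match(r"\d+", piece)  # leading digits only, e.g. '2rc1' -> 2
        if match is None:
            break
        parts.append(int(match.group()))
    return tuple(parts)


def at_least(found: str, minimum: str) -> bool:
    """Compare versions after zero-padding, so '9' >= '5.5.0' and '5.5' == '5.5.0'."""
    a, b = parse_version(found), parse_version(minimum)
    width = max(len(a), len(b))
    a += (0,) * (width - len(a))
    b += (0,) * (width - len(b))
    return a >= b


def gcc_version() -> Optional[str]:
    """Version string reported by `gcc -dumpversion`, or None if gcc is not on PATH."""
    if shutil.which("gcc") is None:
        return None
    result = subprocess.run(["gcc", "-dumpversion"], capture_output=True, text=True)
    return result.stdout.strip() or None


def torch_build() -> Optional[Tuple[str, Optional[str]]]:
    """(torch version, CUDA version it was built with), or None if torch is missing."""
    try:
        import torch
    except ImportError:
        return None
    return torch.__version__, torch.version.cuda


if __name__ == "__main__":
    gcc = gcc_version()
    gcc_ok = gcc is not None and at_least(gcc, "5.5.0")
    print(f"gcc: {gcc} ({'ok' if gcc_ok else 'need >= 5.5.0'})")
    build = torch_build()
    if build is None:
        print("torch: not installed")
    else:
        # With the pinned install this should report 1.10.2+cu111 and CUDA 11.1.
        print(f"torch: {build[0]}, built for CUDA {build[1]}")
```

Versions are zero-padded before comparison because `gcc -dumpversion` may print only the major version (e.g. `9`) on newer compilers.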

To generate simulation data for our benchmarks, run:

- python demos.py

This generates data for the CLIPort tasks and for the spatial-relations, shapes, and compositional benchmarks. To generate data for a specific benchmark only, comment out the other entries in task_list.

Download all the needed checkpoints:

The scripts folder contains scripts for training the goal-conditioned Transporter and evaluating our full model on each benchmark:

- sh scripts/run_train_composition_one_step.sh # composition-one-step benchmark

- sh scripts/run_train_composition_group.sh # composition-group benchmark

- sh scripts/run_train_single_relations.sh # relations benchmark

- sh scripts/run_train_shapes.sh # shapes benchmark

- sh scripts/run_train_cliport.sh # cliport benchmark
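To run several benchmarks in one go, you can chain the per-benchmark scripts. The following is a minimal sketch, assuming it is run from the repository root. Only the script paths come from the list above. `BENCHMARK_SCRIPTS`, `build_commands` and `run_benchmarks` are hypothetical names. By default it performs a dry run that only prints the commands.

```python
# Hypothetical convenience wrapper, not part of the SREM repo: runs the
# per-benchmark scripts listed above in order, stopping at the first failure.
import subprocess
from typing import Dict, List

# Script paths copied from the README; the keys are only labels.
BENCHMARK_SCRIPTS: Dict[str, str] = {
    "composition-one-step": "scripts/run_train_composition_one_step.sh",
    "composition-group": "scripts/run_train_composition_group.sh",
    "relations": "scripts/run_train_single_relations.sh",
    "shapes": "scripts/run_train_shapes.sh",
    "cliport": "scripts/run_train_cliport.sh",
}


def build_commands(benchmarks: List[str]) -> List[List[str]]:
    """Return the `sh <script>` command for each requested benchmark, in order."""
    unknown = [b for b in benchmarks if b not in BENCHMARK_SCRIPTS]
    if unknown:
        raise ValueError(f"unknown benchmark(s): {unknown}")
    return [["sh", BENCHMARK_SCRIPTS[b]] for b in benchmarks]


def run_benchmarks(benchmarks: List[str], dry_run: bool = True) -> List[List[str]]:
    """Print each command; execute it only when dry_run is False."""
    commands = build_commands(benchmarks)
    for cmd in commands:
        print("$", " ".join(cmd))
        if not dry_run:
            # check=True raises CalledProcessError if a script exits non-zero
            subprocess.run(cmd, check=True)
    return commands


if __name__ == "__main__":
    # Set dry_run=False to actually launch the scripts.
    run_benchmarks(["relations", "shapes"], dry_run=True)
```

Unknown benchmark names are rejected before anything runs, so a typo cannot start a partial sequence of long training jobs.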

If you followed the Data Preparation section, you should already have all the checkpoints needed to run the evaluations and reproduce our results.

To train the language parser, first generate the language data by running:

- python data/create_cliport_programs.py

This creates a JSON file of language sentences paired with their expected programs.
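The README names neither the output path nor the schema of this JSON file. The following sketch therefore takes the path as an argument and summarizes whatever structure it finds, without assuming particular keys. It is not part of the SREM repo, and `describe`, `first_example` and `main` are hypothetical names.

```python
# Hypothetical helper, not part of the SREM repo. The README does not say where
# data/create_cliport_programs.py writes its JSON or which keys it uses, so this
# only reports the structure it finds instead of assuming a schema.
import json
import sys
from typing import Any


def describe(data: Any) -> str:
    """One-line summary: container type, size, and the keys of the first record."""
    if isinstance(data, list):
        summary = f"list of {len(data)} item(s)"
        if data and isinstance(data[0], dict):
            summary += f"; first item keys: {sorted(data[0])}"
        return summary
    if isinstance(data, dict):
        return f"dict with {len(data)} key(s): {sorted(data)[:10]}"
    return type(data).__name__


def first_example(data: Any) -> Any:
    """Return one record (e.g. a sentence/program pair) from a list or dict."""
    if isinstance(data, list) and data:
        return data[0]
    if isinstance(data, dict) and data:
        key = next(iter(data))
        return {key: data[key]}
    return None


def main(path: str) -> None:
    with open(path, encoding="utf-8") as f:
        data = json.load(f)
    print(describe(data))
    print(json.dumps(first_example(data), indent=2)[:2000])  # cap very long records


if __name__ == "__main__" and len(sys.argv) > 1:
    main(sys.argv[1])
```

Save it under any name (e.g. the hypothetical inspect_json.py) and run it as `python inspect_json.py <path-to-generated-json>`.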

Then train the parser with:

- sh scripts/train_parser.sh

To train the EBMs:

- sh scripts/train_ebm_script.sh

This trains EBMs for all the concepts used in our paper. To train a single concept, run the corresponding command from this script on its own. Add the --eval flag to run inference and visualize generated samples.
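According to the paper, each language predicate maps to an energy function over object arrangements. Goal scene configurations are then generated by gradient descent on the sum of these energy functions. The pure-Python toy below illustrates only that composition step. `left_of`, `above`, `compose` and `minimize` are hypothetical names. The two energies are hand-written quadratic penalties, whereas SREM's EBMs are learned networks trained by the script above. The optional Gaussian noise in `minimize` is a loose stand-in for Langevin-style sampling, not a faithful implementation of it.

```python
# Toy illustration, not SREM's learned EBMs: two hand-written energies over 2D
# object positions, composed by summation and minimized by gradient descent.
import random
from typing import Callable, Dict, List, Tuple

Pos = Dict[str, List[float]]   # object name -> [x, y]
Grad = Dict[str, List[float]]  # object name -> [dE/dx, dE/dy]
Energy = Callable[[Pos], Tuple[float, Grad]]


def left_of(a: str, b: str, margin: float = 0.1) -> Energy:
    """Zero once a is at least `margin` left of b; quadratic penalty otherwise."""
    def energy(pos: Pos) -> Tuple[float, Grad]:
        gap = pos[a][0] + margin - pos[b][0]  # > 0 means the relation is violated
        if gap <= 0:
            return 0.0, {}
        return gap ** 2, {a: [2 * gap, 0.0], b: [-2 * gap, 0.0]}
    return energy


def above(a: str, b: str, margin: float = 0.1) -> Energy:
    """Zero once a is at least `margin` above b (larger y); quadratic penalty otherwise."""
    def energy(pos: Pos) -> Tuple[float, Grad]:
        gap = pos[b][1] + margin - pos[a][1]
        if gap <= 0:
            return 0.0, {}
        return gap ** 2, {a: [0.0, -2 * gap], b: [0.0, 2 * gap]}
    return energy


def compose(*energies: Energy) -> Energy:
    """Conjunction of concepts: add up the energies and their gradients."""
    def energy(pos: Pos) -> Tuple[float, Grad]:
        total, grads = 0.0, {}
        for term in energies:
            value, g = term(pos)
            total += value
            for name, (gx, gy) in g.items():
                acc = grads.setdefault(name, [0.0, 0.0])
                acc[0] += gx
                acc[1] += gy
        return total, grads
    return energy


def minimize(energy: Energy, start: Pos, steps: int = 200, lr: float = 0.1,
             noise: float = 0.0, seed: int = 0) -> Pos:
    """Gradient descent on all object positions; noise > 0 adds Gaussian jitter."""
    rng = random.Random(seed)
    pos = {name: list(xy) for name, xy in start.items()}  # don't mutate the input
    for _ in range(steps):
        _, grads = energy(pos)  # gradients from the current snapshot
        for name, xy in pos.items():
            gx, gy = grads.get(name, (0.0, 0.0))
            xy[0] -= lr * gx
            xy[1] -= lr * gy
            if noise > 0:
                xy[0] += noise * rng.gauss(0.0, 1.0)
                xy[1] += noise * rng.gauss(0.0, 1.0)
    return pos


if __name__ == "__main__":
    # "Put the cube left of the bowl and the ball above the bowl."
    scene = compose(left_of("cube", "bowl"), above("ball", "bowl"))
    start = {"cube": [0.5, 0.0], "bowl": [0.3, 0.0], "ball": [0.3, 0.0]}
    goal = minimize(scene, start)
    print({name: [round(v, 3) for v in xy] for name, xy in goal.items()})
```

Adding another predicate only means passing one more energy to `compose`. With the defaults, the example converges to roughly cube x = 0.35 and bowl x = 0.45, which places the cube left of the bowl by the 0.1 margin. It also moves the ball to y = 0.05 and the bowl to y = -0.05.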

We train the grounding model using the publicly available code of BUTD-DETR.

Please check our website for qualitative results and a quick overview of this project.

Parts of this code are based on the CLIPort codebase. The code for the grounding module is borrowed from BUTD-DETR. Parts of the EBM training code are based on the compose-visual-relations codebase.

If you find SREM useful in your research, please consider citing:

@article{gkanatsios2023energy,
  title={Energy-based models as zero-shot planners for compositional scene rearrangement},
  author={Gkanatsios, Nikolaos and Jain, Ayush and Xian, Zhou and Zhang, Yunchu and Atkeson, Christopher and Fragkiadaki, Katerina},
  journal={arXiv preprint arXiv:2304.14391},
  year={2023}
}