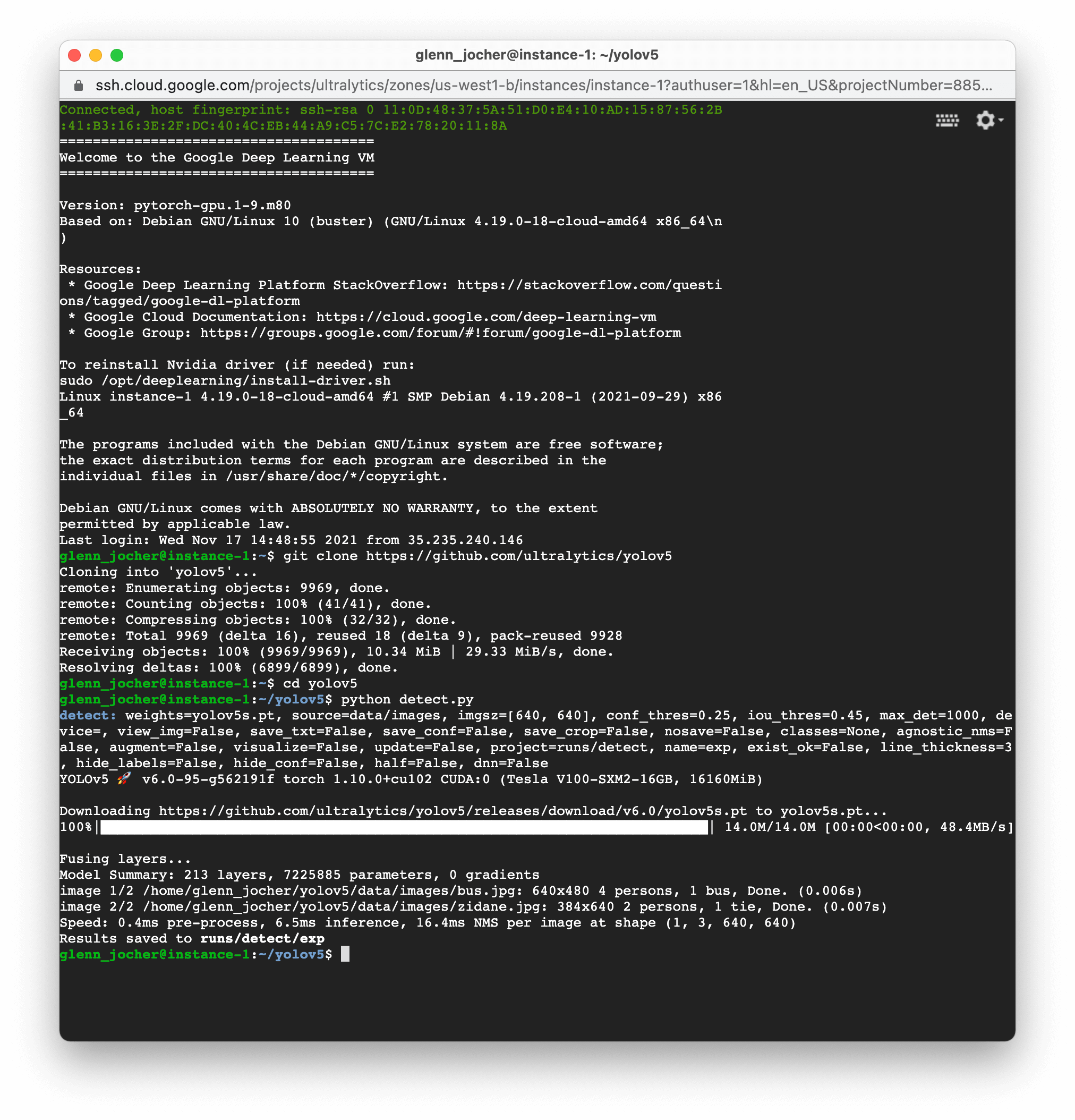

GCP Quickstart

This quickstart guide 📚 helps new users run YOLOv5 🚀 on a Google Cloud Platform (GCP) Deep Learning Virtual Machine (VM) ⭐. New GCP users are eligible for a $300 free credit offer. Other quickstart options for YOLOv5 include our Colab Notebook.

Select a Deep Learning VM from the GCP marketplace and configure it with an n1-standard-8 instance (8 vCPUs and 30 GB memory), a GPU of your choice, and a 300 GB SSD Persistent Disk for sufficient I/O speed. Check 'Install NVIDIA GPU driver automatically on first startup?', then click 'Deploy'. All dependencies are included in the preinstalled Anaconda Python environment.
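The same configuration can probably also be requested from the command line instead of the marketplace form. The sketch below is a dry run: it only prints a `gcloud compute instances create` command for review and does not create anything. The instance name, zone, image family and GPU type are hypothetical placeholders, not values from this guide; the machine type and disk settings mirror the ones above.

```shell
#!/usr/bin/env bash
# Hypothetical placeholders: adjust to your project before running the printed command.
INSTANCE_NAME="yolov5-vm"
ZONE="us-central1-a"
IMAGE_FAMILY="pytorch-latest-gpu"   # assumed Deep Learning VM image family
GPU_TYPE="nvidia-tesla-t4"          # assumed GPU; any available type should work

# Print (not execute) a create command matching the settings described above.
build_create_cmd() {
  printf '%s ' gcloud compute instances create "$INSTANCE_NAME" \
    --zone="$ZONE" \
    --machine-type=n1-standard-8 \
    --image-family="$IMAGE_FAMILY" \
    --image-project=deeplearning-platform-release \
    --accelerator="type=${GPU_TYPE},count=1" \
    --maintenance-policy=TERMINATE \
    --boot-disk-type=pd-ssd \
    --boot-disk-size=300GB \
    --metadata=install-nvidia-driver=True
  printf '\n'
}

build_create_cmd
```

Copy the printed line into a terminal once the placeholders match your project. The `install-nvidia-driver=True` metadata entry plays the role of the 'Install NVIDIA GPU driver automatically on first startup?' checkbox.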

Clone repo and install requirements.txt in a Python>=3.7.0 environment, including PyTorch>=1.7. Models and datasets download automatically from the latest YOLOv5 release.
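The Python>=3.7.0 requirement can be checked before installing. This is a minimal sketch, assuming GNU `sort -V` is available on the VM; `version_ge` is a hypothetical helper written for this example and is not part of YOLOv5.

```shell
#!/usr/bin/env bash
# version_ge A B: succeeds when version A >= version B (relies on GNU `sort -V`).
version_ge() {
  [ "$(printf '%s\n%s\n' "$1" "$2" | sort -V | head -n 1)" = "$2" ]
}

# Report whether the interpreter on PATH meets the Python>=3.7.0 requirement.
if command -v python3 >/dev/null 2>&1; then
  py_version="$(python3 --version 2>&1 | awk '{print $2}')"
  if version_ge "$py_version" "3.7.0"; then
    echo "Python $py_version: OK"
  else
    echo "Python $py_version: too old, need >= 3.7.0"
  fi
else
  echo "python3 not found on PATH"
fi
```

With the check passing, the clone and install commands that follow can be run as written.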

git clone https://github.com/ultralytics/yolov5 # clone

cd yolov5

pip install -r requirements.txt # install

Start training, testing, detecting and exporting YOLOv5 models on your VM!

python train.py # train a model

python val.py --weights yolov5s.pt # validate a model for Precision, Recall and mAP

python detect.py --weights yolov5s.pt --source path/to/images # run inference on images and videos

python export.py --weights yolov5s.pt --include onnx coreml tflite # export models to other formats
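Before a long training run it may help to confirm that the GPU driver is reachable. The sketch below prints a suggested training command rather than running it. `pick_device` is a hypothetical helper, and the dataset, image size and epoch count are illustrative values, not recommendations from this guide.

```shell
#!/usr/bin/env bash
# pick_device: print "0" (first CUDA device) when nvidia-smi can list a GPU, else "cpu".
pick_device() {
  if command -v nvidia-smi >/dev/null 2>&1 && nvidia-smi -L >/dev/null 2>&1; then
    echo 0
  else
    echo cpu
  fi
}

# Print a training command for review; the argument values are illustrative only.
echo "python train.py --data coco128.yaml --weights yolov5s.pt --img 640 --epochs 3 --device $(pick_device)"
```

If this prints `--device cpu` on a GPU instance, the NVIDIA driver installation from first startup may not have finished yet.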

Create 64GB of swap memory (to --cache large datasets).
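Whether the optional `swapoff` step in the commands that follow is needed depends on whether swap is already active. This is a minimal pre-check sketch, assuming a Linux `/proc/meminfo`; `swap_total_kib` is a hypothetical helper written for this example.

```shell
#!/usr/bin/env bash
# swap_total_kib [FILE]: print the SwapTotal value (kB as reported by the kernel)
# from a meminfo-style file, defaulting to /proc/meminfo.
swap_total_kib() {
  awk '/^SwapTotal:/ {print $2}' "${1:-/proc/meminfo}"
}

# Treat a missing or unreadable file as "no swap" instead of failing.
current="$(swap_total_kib 2>/dev/null || true)"
if [ "${current:-0}" -gt 0 ]; then
  echo "Swap already active: ${current} kB (see the optional swapoff step)"
else
  echo "No swap active"
fi
```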

sudo swapoff /swapfile # (optional) clear existing

sudo fallocate -l 64G /swapfile

sudo chmod 600 /swapfile

sudo mkswap /swapfile

sudo swapon /swapfile

free -h # check memory

Mount local SSD

lsblk

sudo mkfs.ext4 -F /dev/nvme0n1

sudo mkdir -p /mnt/disks/nvme0n1

sudo mount /dev/nvme0n1 /mnt/disks/nvme0n1

sudo chmod a+w /mnt/disks/nvme0n1

cp -r coco /mnt/disks/nvme0n1

© 2024 Ultralytics Inc. All rights reserved.

https://ultralytics.com