I want to know how accurate the model is for each class, or how I can get the confusion matrix. Looking forward to your help.


Replies: 4 comments 2 replies


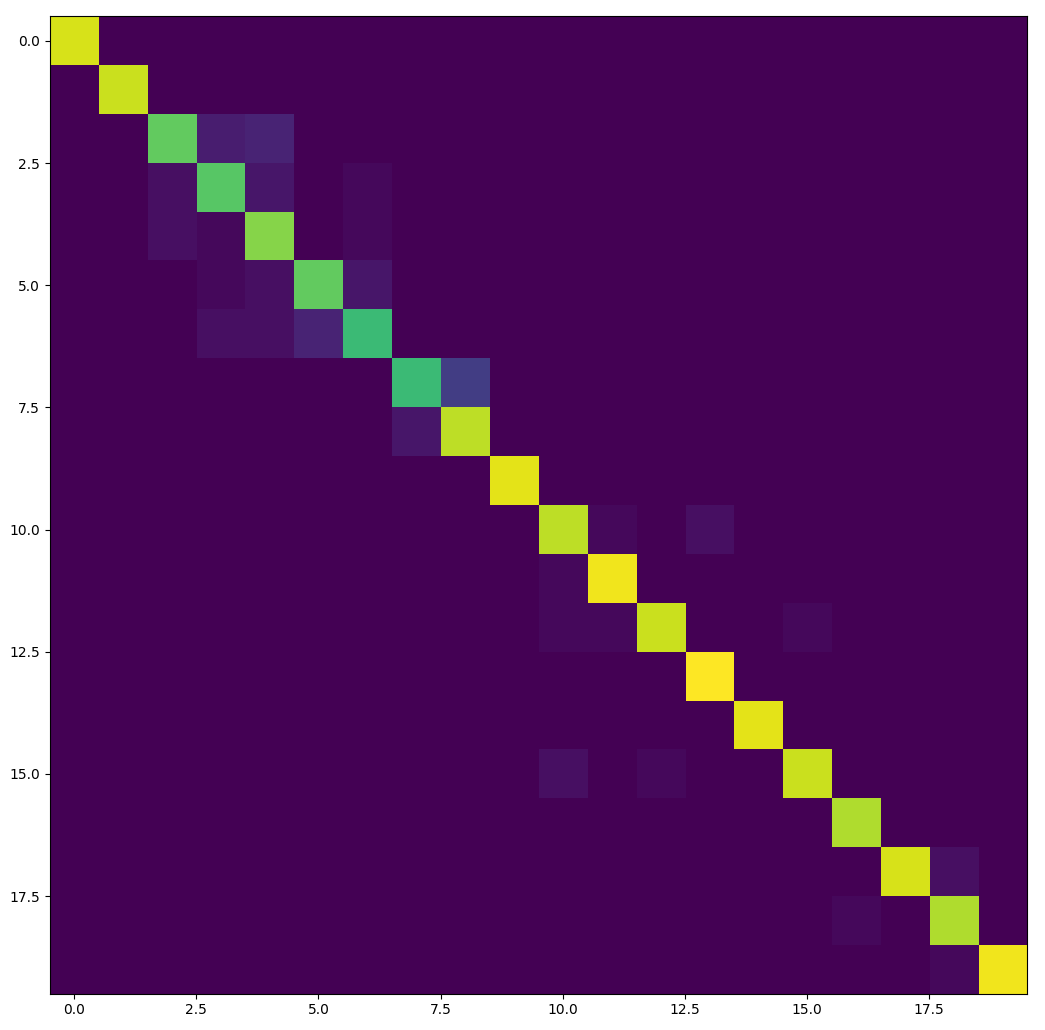

Hello, we haven't exposed the confusion matrix calculation API yet, but you can call it directly.

For example, first get the classification scores of every sample:

```shell
python tools/test.py your_config_file your_checkpoint --out result.pkl --out-items class_scores
```

Then calculate the confusion matrix:

```python
>>> import mmcv
>>> from mmcls.datasets import build_dataset
>>> from mmcls.core.evaluation import calculate_confusion_matrix
>>> cfg = mmcv.Config.fromfile("your_config_file")
>>> dataset = build_dataset(cfg.data.test)
>>> pred = mmcv.load("./result.pkl")['class_scores']
>>> matrix = calculate_confusion_matrix(pred, dataset.get_gt_labels())
>>> print(matrix)
tensor([[47.,  0.,  0.,  ...,  0.,  0.,  0.],
        [ 0., 46.,  0.,  ...,  0.,  0.,  0.],
        [ 0.,  0., 38.,  ...,  0.,  0.,  0.],
        ...,
        [ 0.,  0.,  0.,  ..., 36.,  0.,  0.],
        [ 0.,  0.,  0.,  ...,  0., 24.,  0.],
        [ 0.,  0.,  0.,  ...,  0.,  0., 26.]])
>>> import matplotlib.pyplot as plt
>>> plt.imshow(matrix[:20, :20])  # Visualize the first twenty classes.
>>> plt.show()
```
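For the per-class accuracy part of the question, here is a minimal sketch of how it could be derived from a confusion matrix. It assumes each row is the ground-truth class and each column is the predicted class; please verify that orientation against your own results. The helper name `per_class_accuracy` and the toy numbers are hypothetical, used only for illustration.

```python
def per_class_accuracy(confusion):
    """Return per-class accuracy (recall) from a square confusion matrix.

    Assumption: confusion[i][j] counts samples whose true class is i and
    whose predicted class is j. Classes with no samples yield None.
    """
    accuracies = []
    for i, row in enumerate(confusion):
        total = sum(row)  # number of samples whose true class is i
        accuracies.append(row[i] / total if total else None)
    return accuracies


# Toy 3-class matrix with made-up counts, not taken from a real model.
toy = [
    [8, 2, 0],
    [1, 9, 0],
    [0, 0, 0],
]
for class_id, acc in enumerate(per_class_accuracy(toy)):
    print(class_id, acc)
```

For the tensor returned above, you could pass `matrix.tolist()` to this helper, or compute the same ratio directly with `matrix.diag() / matrix.sum(dim=1)`. Note that the tensor version yields NaN rather than `None` for classes with no samples.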


Wow, thank you very much!

