-## Results and models

+## Results and Models

-### Kinetics-400

+1. Models with * in `Inference results` are ported from the repo [SlowFast](https://github.com/facebookresearch/SlowFast/) and tested on our data, and models in `Training results` are trained in MMAction2 on our data.

+2. The values in columns named after `reference` are copied from paper, and `reference*` are results using [SlowFast](https://github.com/facebookresearch/SlowFast/) repo and trained on our data.

+3. The validation set of Kinetics400 we used consists of 19796 videos. These videos are available at [Kinetics400-Validation](https://mycuhk-my.sharepoint.com/:u:/g/personal/1155136485_link_cuhk_edu_hk/EbXw2WX94J1Hunyt3MWNDJUBz-nHvQYhO9pvKqm6g39PMA?e=a9QldB). The corresponding [data list](https://download.openmmlab.com/mmaction/dataset/k400_val/kinetics_val_list.txt) (each line is of the format 'video_id, num_frames, label_index') and the [label map](https://download.openmmlab.com/mmaction/dataset/k400_val/kinetics_class2ind.txt) are also available.

+4. MaskFeat fine-tuning experiment is based on pretrain model from [MMSelfSup](https://github.com/open-mmlab/mmselfsup/tree/dev-1.x/projects/maskfeat_video), and the corresponding reference result is based on pretrain model from [SlowFast](https://github.com/facebookresearch/SlowFast/).

+5. Due to the different versions of Kinetics-400, our training results are different from paper.

+6. Due to the training efficiency, we currently only provide MViT-small training results, we don't ensure other config files' training accuracy and welcome you to contribute your reproduction results.

+7. We use `repeat augment` in MViT training configs following [SlowFast](https://github.com/facebookresearch/SlowFast/). [Repeat augment](https://arxiv.org/pdf/1901.09335.pdf) takes multiple times of data augment for one video, this way can improve the generalization of the model and relieve the IO stress of loading videos. And please note that the actual batch size is `num_repeats` times of `batch_size` in `train_dataloader`.

-| frame sampling strategy | resolution | backbone | pretrain | top1 acc | top5 acc | reference top1 acc | reference top1 acc | testing protocol | FLOPs | params | config | ckpt |

+### Inference results

+

+#### Kinetics-400

+

+| frame sampling strategy | resolution | backbone | pretrain | top1 acc | top5 acc | reference top1 acc | reference top5 acc | testing protocol | FLOPs | params | config | ckpt |

| :---------------------: | :--------: | :--------: | :----------: | :------: | :------: | :------------------------------: | :------------------------------: | :--------------: | :---: | :----: | :------------------: | :----------------: |

-| 16x4x1 | 224x224 | MViTv2-S\* | From scratch | 81.1 | 94.7 | [81.0](https://github.com/facebookresearch/SlowFast/blob/main/projects/mvitv2/README.md) | [94.6](https://github.com/facebookresearch/SlowFast/blob/main/projects/mvitv2/README.md) | 5 clips x 1 crop | 64G | 34.5M | [config](/configs/recognition/mvit/mvit-small-p244_16x4x1_kinetics400-rgb.py) | [ckpt](https://download.openmmlab.com/mmaction/v1.0/recognition/mvit/converted/mvit-small-p244_16x4x1_kinetics400-rgb_20221021-9ebaaeed.pth) |

+| 16x4x1 | 224x224 | MViTv2-S\* | From scratch | 81.1 | 94.7 | [81.0](https://github.com/facebookresearch/SlowFast/blob/main/projects/mvitv2/README.md) | [94.6](https://github.com/facebookresearch/SlowFast/blob/main/projects/mvitv2/README.md) | 5 clips x 1 crop | 64G | 34.5M | [config](/configs/recognition/mvit/mvit-small-p244_32xb16-16x4x1-200e_kinetics400-rgb.py) | [ckpt](https://download.openmmlab.com/mmaction/v1.0/recognition/mvit/converted/mvit-small-p244_16x4x1_kinetics400-rgb_20221021-9ebaaeed.pth) |

| 32x3x1 | 224x224 | MViTv2-B\* | From scratch | 82.6 | 95.8 | [82.9](https://github.com/facebookresearch/SlowFast/blob/main/projects/mvitv2/README.md) | [95.7](https://github.com/facebookresearch/SlowFast/blob/main/projects/mvitv2/README.md) | 5 clips x 1 crop | 225G | 51.2M | [config](/configs/recognition/mvit/mvit-base-p244_32x3x1_kinetics400-rgb.py) | [ckpt](https://download.openmmlab.com/mmaction/v1.0/recognition/mvit/converted/mvit-base-p244_32x3x1_kinetics400-rgb_20221021-f392cd2d.pth) |

| 40x3x1 | 312x312 | MViTv2-L\* | From scratch | 85.4 | 96.2 | [86.1](https://github.com/facebookresearch/SlowFast/blob/main/projects/mvitv2/README.md) | [97.0](https://github.com/facebookresearch/SlowFast/blob/main/projects/mvitv2/README.md) | 5 clips x 3 crop | 2828G | 213M | [config](/configs/recognition/mvit/mvit-large-p244_40x3x1_kinetics400-rgb.py) | [ckpt](https://download.openmmlab.com/mmaction/v1.0/recognition/mvit/converted/mvit-large-p244_40x3x1_kinetics400-rgb_20221021-11fe1f97.pth) |

-### Something-Something V2

+#### Something-Something V2

-| frame sampling strategy | resolution | backbone | pretrain | top1 acc | top5 acc | reference top1 acc | reference top1 acc | testing protocol | FLOPs | params | config | ckpt |

+| frame sampling strategy | resolution | backbone | pretrain | top1 acc | top5 acc | reference top1 acc | reference top5 acc | testing protocol | FLOPs | params | config | ckpt |

| :---------------------: | :--------: | :--------: | :----------: | :------: | :------: | :------------------------------: | :------------------------------: | :--------------: | :---: | :----: | :------------------: | :----------------: |

-| uniform 16 | 224x224 | MViTv2-S\* | K400 | 68.1 | 91.0 | [68.2](https://github.com/facebookresearch/SlowFast/blob/main/projects/mvitv2/README.md) | [91.4](https://github.com/facebookresearch/SlowFast/blob/main/projects/mvitv2/README.md) | 1 clips x 3 crop | 64G | 34.4M | [config](/configs/recognition/mvit/mvit-small-p244_u16_sthv2-rgb.py) | [ckpt](https://download.openmmlab.com/mmaction/v1.0/recognition/mvit/converted/mvit-small-p244_u16_sthv2-rgb_20221021-65ecae7d.pth) |

+| uniform 16 | 224x224 | MViTv2-S\* | K400 | 68.1 | 91.0 | [68.2](https://github.com/facebookresearch/SlowFast/blob/main/projects/mvitv2/README.md) | [91.4](https://github.com/facebookresearch/SlowFast/blob/main/projects/mvitv2/README.md) | 1 clips x 3 crop | 64G | 34.4M | [config](/configs/recognition/mvit/mvit-small-p244_k400-pre_16xb16-u16-100e_sthv2-rgb.py) | [ckpt](https://download.openmmlab.com/mmaction/v1.0/recognition/mvit/converted/mvit-small-p244_u16_sthv2-rgb_20221021-65ecae7d.pth) |

| uniform 32 | 224x224 | MViTv2-B\* | K400 | 70.8 | 92.7 | [70.5](https://github.com/facebookresearch/SlowFast/blob/main/projects/mvitv2/README.md) | [92.7](https://github.com/facebookresearch/SlowFast/blob/main/projects/mvitv2/README.md) | 1 clips x 3 crop | 225G | 51.1M | [config](/configs/recognition/mvit/mvit-base-p244_u32_sthv2-rgb.py) | [ckpt](https://download.openmmlab.com/mmaction/v1.0/recognition/mvit/converted/mvit-base-p244_u32_sthv2-rgb_20221021-d5de5da6.pth) |

| uniform 40 | 312x312 | MViTv2-L\* | IN21K + K400 | 73.2 | 94.0 | [73.3](https://github.com/facebookresearch/SlowFast/blob/main/projects/mvitv2/README.md) | [94.0](https://github.com/facebookresearch/SlowFast/blob/main/projects/mvitv2/README.md) | 1 clips x 3 crop | 2828G | 213M | [config](/configs/recognition/mvit/mvit-large-p244_u40_sthv2-rgb.py) | [ckpt](https://download.openmmlab.com/mmaction/v1.0/recognition/mvit/converted/mvit-large-p244_u40_sthv2-rgb_20221021-61696e07.pth) |

-*Models with * are ported from the repo [SlowFast](https://github.com/facebookresearch/SlowFast/) and tested on our data. Currently, we only support the testing of MViT models, training will be available soon.*

+### Training results

+

+#### Kinetics-400

+

+| frame sampling strategy | resolution | backbone | pretrain | top1 acc | top5 acc | reference\* top1 acc | reference\* top5 acc | testing protocol | FLOPs | params | config | ckpt | log |

+| :---------------------: | :--------: | :------: | :-----------: | :------: | :------: | :---------------------------: | :----------------------------: | :---------------: | :---: | :----: | :--------------: | :------------: | :-----------: |

+| 16x4x1 | 224x224 | MViTv2-S | From scratch | 80.6 | 94.7 | [80.8](https://github.com/facebookresearch/SlowFast/blob/main/projects/mvitv2/README.md) | [94.6](https://github.com/facebookresearch/SlowFast/blob/main/projects/mvitv2/README.md) | 5 clips x 1 crop | 64G | 34.5M | [config](configs/recognition/mvit/mvit-small-p244_32xb16-16x4x1-200e_kinetics400-rgb.py) | [ckpt](https://download.openmmlab.com/mmaction/v1.0/recognition/mvit/mvit-small-p244_32xb16-16x4x1-200e_kinetics400-rgb/mvit-small-p244_32xb16-16x4x1-200e_kinetics400-rgb_20230201-23284ff3.pth) | [log](https://download.openmmlab.com/mmaction/v1.0/recognition/mvit/mvit-small-p244_32xb16-16x4x1-200e_kinetics400-rgb/mvit-small-p244_32xb16-16x4x1-200e_kinetics400-rgb.log) |

+| 16x4x1 | 224x224 | MViTv2-S | K400 MaskFeat | 81.8 | 95.2 | [81.5](https://github.com/facebookresearch/SlowFast/blob/main/projects/maskfeat/README.md) | [94.9](https://github.com/facebookresearch/SlowFast/blob/main/projects/maskfeat/README.md) | 10 clips x 1 crop | 71G | 36.4M | [config](/configs/recognition/mvit/mvit-small-p244_k400-maskfeat-pre_8xb32-16x4x1-100e_kinetics400-rgb.py) | [ckpt](https://download.openmmlab.com/mmaction/v1.0/recognition/mvit/mvit-small-p244_k400-maskfeat-pre_8xb32-16x4x1-100e_kinetics400-rgb/mvit-small-p244_k400-maskfeat-pre_8xb32-16x4x1-100e_kinetics400-rgb_20230201-5bced1d0.pth) | [log](https://download.openmmlab.com/mmaction/v1.0/recognition/mvit/mvit-small-p244_k400-maskfeat-pre_8xb32-16x4x1-100e_kinetics400-rgb/mvit-small-p244_k400-maskfeat-pre_8xb32-16x4x1-100e_kinetics400-rgb.log) |

+

+the corresponding result without repeat augment is as follows:

+

+| frame sampling strategy | resolution | backbone | pretrain | top1 acc | top5 acc | reference\* top1 acc | reference\* top5 acc | testing protocol | FLOPs | params |

+| :---------------------: | :--------: | :------: | :----------: | :------: | :------: | :--------------------------------------------------: | :--------------------------------------------------: | :--------------: | :---: | :----: |

+| 16x4x1 | 224x224 | MViTv2-S | From scratch | 79.4 | 93.9 | [80.8](https://github.com/facebookresearch/SlowFast/blob/main/projects/mvitv2/README.md) | [94.6](https://github.com/facebookresearch/SlowFast/blob/main/projects/mvitv2/README.md) | 5 clips x 1 crop | 64G | 34.5M |

+

+#### Something-Something V2

-1. The values in columns named after "reference" are copied from paper

-2. The validation set of Kinetics400 we used consists of 19796 videos. These videos are available at [Kinetics400-Validation](https://mycuhk-my.sharepoint.com/:u:/g/personal/1155136485_link_cuhk_edu_hk/EbXw2WX94J1Hunyt3MWNDJUBz-nHvQYhO9pvKqm6g39PMA?e=a9QldB). The corresponding [data list](https://download.openmmlab.com/mmaction/dataset/k400_val/kinetics_val_list.txt) (each line is of the format 'video_id, num_frames, label_index') and the [label map](https://download.openmmlab.com/mmaction/dataset/k400_val/kinetics_class2ind.txt) are also available.

+| frame sampling strategy | resolution | backbone | pretrain | top1 acc | top5 acc | reference top1 acc | reference top5 acc | testing protocol | FLOPs | params | config | ckpt | log |

+| :---------------------: | :--------: | :------: | :------: | :------: | :------: | :---------------------------: | :----------------------------: | :--------------: | :---: | :----: | :----------------: | :--------------: | :-------------: |

+| uniform 16 | 224x224 | MViTv2-S | K400 | 68.2 | 91.3 | [68.2](https://github.com/facebookresearch/SlowFast/blob/main/projects/mvitv2/README.md) | [91.4](https://github.com/facebookresearch/SlowFast/blob/main/projects/mvitv2/README.md) | 1 clips x 3 crop | 64G | 34.4M | [config](/configs/recognition/mvit/mvit-small-p244_k400-pre_16xb16-u16-100e_sthv2-rgb) | [ckpt](https://download.openmmlab.com/mmaction/v1.0/recognition/mvit/mvit-small-p244_k400-pre_16xb16-u16-100e_sthv2-rgb/mvit-small-p244_k400-pre_16xb16-u16-100e_sthv2-rgb_20230201-4065c1b9.pth) | [log](https://download.openmmlab.com/mmaction/v1.0/recognition/mvit/mvit-small-p244_k400-pre_16xb16-u16-100e_sthv2-rgb/mvit-small-p244_k400-pre_16xb16-u16-100e_sthv2-rgb.log) |

For more details on data preparation, you can refer to

diff --git a/configs/recognition/mvit/metafile.yml b/configs/recognition/mvit/metafile.yml

index 888fa24732..3170c61bdc 100644

--- a/configs/recognition/mvit/metafile.yml

+++ b/configs/recognition/mvit/metafile.yml

@@ -6,8 +6,8 @@ Collections:

Title: "MViTv2: Improved Multiscale Vision Transformers for Classification and Detection"

Models:

- - Name: mvit-small-p244_16x4x1_kinetics400-rgb

- Config: configs/recognition/mvit/mvit-small-p244_16x4x1_kinetics400-rgb.py

+ - Name: mvit-small-p244_32xb16-16x4x1-200e_kinetics400-rgb_infer

+ Config: configs/recognition/mvit/mvit-small-p244_32xb16-16x4x1-200e_kinetics400-rgb.py

In Collection: MViT

Metadata:

Architecture: MViT-small

@@ -24,6 +24,28 @@ Models:

Top 5 Accuracy: 94.7

Weights: https://download.openmmlab.com/mmaction/v1.0/recognition/mvit/converted/mvit-small-p244_16x4x1_kinetics400-rgb_20221021-9ebaaeed.pth

+ - Name: mvit-small-p244_32xb16-16x4x1-200e_kinetics400-rgb

+ Config: configs/recognition/mvit/mvit-small-p244_32xb16-16x4x1-200e_kinetics400-rgb.py

+ In Collection: MViT

+ Metadata:

+ Architecture: MViT-small

+ Batch Size: 16

+ Epochs: 100

+ FLOPs: 64G

+ Parameters: 34.5M

+ Resolution: 224x224

+ Training Data: Kinetics-400

+ Training Resources: 32 GPUs

+ Modality: RGB

+ Results:

+ - Dataset: Kinetics-400

+ Task: Action Recognition

+ Metrics:

+ Top 1 Accuracy: 80.6

+ Top 5 Accuracy: 94.7

+ Training Log: https://download.openmmlab.com/mmaction/v1.0/recognition/mvit/mvit-small-p244_32xb16-16x4x1-200e_kinetics400-rgb/mvit-small-p244_32xb16-16x4x1-200e_kinetics400-rgb.log

+ Weights: https://download.openmmlab.com/mmaction/v1.0/recognition/mvit/mvit-small-p244_32xb16-16x4x1-200e_kinetics400-rgb/mvit-small-p244_32xb16-16x4x1-200e_kinetics400-rgb_20230201-23284ff3.pth

+

- Name: mvit-base-p244_32x3x1_kinetics400-rgb

Config: configs/recognition/mvit/mvit-base-p244_32x3x1_kinetics400-rgb.py

In Collection: MViT

@@ -60,8 +82,8 @@ Models:

Top 5 Accuracy: 94.7

Weights: https://download.openmmlab.com/mmaction/v1.0/recognition/mvit/converted/mvit-large-p244_40x3x1_kinetics400-rgb_20221021-11fe1f97.pth

- - Name: mvit-small-p244_u16_sthv2-rgb

- Config: configs/recognition/mvit/mvit-small-p244_u16_sthv2-rgb.py

+ - Name: mvit-small-p244_k400-pre_16xb16-u16-100e_sthv2-rgb_infer

+ Config: configs/recognition/mvit/mvit-small-p244_k400-pre_16xb16-u16-100e_sthv2-rgb.py

In Collection: MViT

Metadata:

Architecture: MViT-small

@@ -78,6 +100,29 @@ Models:

Top 5 Accuracy: 91.0

Weights: https://download.openmmlab.com/mmaction/v1.0/recognition/mvit/converted/mvit-small-p244_u16_sthv2-rgb_20221021-65ecae7d.pth

+ - Name: mvit-small-p244_k400-pre_16xb16-u16-100e_sthv2-rgb

+ Config: configs/recognition/mvit/mvit-small-p244_32xb16-16x4x1-200e_kinetics400-rgb.py

+ In Collection: MViT

+ Metadata:

+ Architecture: MViT-small

+ Batch Size: 16

+ Epochs: 100

+ FLOPs: 64G

+ Parameters: 34.4M

+ Pretrained: Kinetics-400

+ Resolution: 224x224

+ Training Data: SthV2

+ Training Resources: 16 GPUs

+ Modality: RGB

+ Results:

+ - Dataset: SthV2

+ Task: Action Recognition

+ Metrics:

+ Top 1 Accuracy: 68.2

+ Top 5 Accuracy: 91.3

+ Training Log: https://download.openmmlab.com/mmaction/v1.0/recognition/mvit/mvit-small-p244_k400-pre_16xb16-u16-100e_sthv2-rgb/mvit-small-p244_k400-pre_16xb16-u16-100e_sthv2-rgb.log

+ Weights: https://download.openmmlab.com/mmaction/v1.0/recognition/mvit/mvit-small-p244_k400-pre_16xb16-u16-100e_sthv2-rgb/mvit-small-p244_k400-pre_16xb16-u16-100e_sthv2-rgb_20230201-4065c1b9.pth

+

- Name: mvit-base-p244_u32_sthv2-rgb

Config: configs/recognition/mvit/mvit-base-p244_u32_sthv2-rgb.py

In Collection: MViT

@@ -113,3 +158,26 @@ Models:

Top 1 Accuracy: 73.2

Top 5 Accuracy: 94.0

Weights: https://download.openmmlab.com/mmaction/v1.0/recognition/mvit/converted/mvit-large-p244_u40_sthv2-rgb_20221021-61696e07.pth

+

+ - Name: mvit-small-p244_k400-maskfeat-pre_8xb32-16x4x1-100e_kinetics400-rgb

+ Config: configs/recognition/mvit/mvit-small-p244_k400-maskfeat-pre_8xb32-16x4x1-100e_kinetics400-rgb.py

+ In Collection: MViT

+ Metadata:

+ Architecture: MViT-small

+ Batch Size: 32

+ Epochs: 100

+ FLOPs: 71G

+ Parameters: 36.4M

+ Pretrained: Kinetics-400 MaskFeat

+ Resolution: 224x224

+ Training Data: Kinetics-400

+ Training Resources: 8 GPUs

+ Modality: RGB

+ Results:

+ - Dataset: Kinetics-400

+ Task: Action Recognition

+ Metrics:

+ Top 1 Accuracy: 81.8

+ Top 5 Accuracy: 95.2

+ Training Log: https://download.openmmlab.com/mmaction/v1.0/recognition/mvit/mvit-small-p244_k400-maskfeat-pre_8xb32-16x4x1-100e_kinetics400-rgb/mvit-small-p244_k400-maskfeat-pre_8xb32-16x4x1-100e_kinetics400-rgb.log

+ Weights: https://download.openmmlab.com/mmaction/v1.0/recognition/mvit/mvit-small-p244_k400-maskfeat-pre_8xb32-16x4x1-100e_kinetics400-rgb/mvit-small-p244_k400-maskfeat-pre_8xb32-16x4x1-100e_kinetics400-rgb_20230201-5bced1d0.pth

diff --git a/configs/recognition/mvit/mvit-base-p244_32x3x1_kinetics400-rgb.py b/configs/recognition/mvit/mvit-base-p244_32x3x1_kinetics400-rgb.py

index b1e186f195..fb552c9329 100644

--- a/configs/recognition/mvit/mvit-base-p244_32x3x1_kinetics400-rgb.py

+++ b/configs/recognition/mvit/mvit-base-p244_32x3x1_kinetics400-rgb.py

@@ -76,13 +76,17 @@

dict(type='PackActionInputs')

]

+repeat_sample = 2

train_dataloader = dict(

batch_size=8,

num_workers=8,

persistent_workers=True,

sampler=dict(type='DefaultSampler', shuffle=True),

+ collate_fn=dict(type='repeat_pseudo_collate'),

dataset=dict(

- type=dataset_type,

+ type='RepeatAugDataset',

+ num_repeats=repeat_sample,

+ sample_once=True,

ann_file=ann_file_train,

data_prefix=dict(video=data_root),

pipeline=train_pipeline))

@@ -113,19 +117,21 @@

test_evaluator = val_evaluator

train_cfg = dict(

- type='EpochBasedTrainLoop', max_epochs=30, val_begin=1, val_interval=3)

+ type='EpochBasedTrainLoop', max_epochs=200, val_begin=1, val_interval=1)

val_cfg = dict(type='ValLoop')

test_cfg = dict(type='TestLoop')

+base_lr = 1.6e-3

optim_wrapper = dict(

- type='AmpOptimWrapper',

optimizer=dict(

- type='AdamW', lr=1.6e-3, betas=(0.9, 0.999), weight_decay=0.05))

+ type='AdamW', lr=base_lr, betas=(0.9, 0.999), weight_decay=0.05),

+ paramwise_cfg=dict(norm_decay_mult=0.0, bias_decay_mult=0.0),

+ clip_grad=dict(max_norm=1, norm_type=2))

param_scheduler = [

dict(

type='LinearLR',

- start_factor=0.1,

+ start_factor=0.01,

by_epoch=True,

begin=0,

end=30,

@@ -133,9 +139,9 @@

dict(

type='CosineAnnealingLR',

T_max=200,

- eta_min=0,

+ eta_min=base_lr / 100,

by_epoch=True,

- begin=0,

+ begin=30,

end=200,

convert_to_iter_based=True)

]

@@ -147,4 +153,4 @@

# - `enable` means enable scaling LR automatically

# or not by default.

# - `base_batch_size` = (8 GPUs) x (8 samples per GPU).

-auto_scale_lr = dict(enable=False, base_batch_size=64)

+auto_scale_lr = dict(enable=False, base_batch_size=512 // repeat_sample)

diff --git a/configs/recognition/mvit/mvit-base-p244_u32_sthv2-rgb.py b/configs/recognition/mvit/mvit-base-p244_u32_sthv2-rgb.py

index c954b60b54..cdbf22dd1f 100644

--- a/configs/recognition/mvit/mvit-base-p244_u32_sthv2-rgb.py

+++ b/configs/recognition/mvit/mvit-base-p244_u32_sthv2-rgb.py

@@ -108,7 +108,6 @@

base_lr = 1.6e-3

optim_wrapper = dict(

- type='AmpOptimWrapper',

optimizer=dict(

type='AdamW', lr=base_lr, betas=(0.9, 0.999), weight_decay=0.05))

diff --git a/configs/recognition/mvit/mvit-large-p244_40x3x1_kinetics400-rgb.py b/configs/recognition/mvit/mvit-large-p244_40x3x1_kinetics400-rgb.py

index 8c93519914..f2d7ef1419 100644

--- a/configs/recognition/mvit/mvit-large-p244_40x3x1_kinetics400-rgb.py

+++ b/configs/recognition/mvit/mvit-large-p244_40x3x1_kinetics400-rgb.py

@@ -13,12 +13,6 @@

type='ActionDataPreprocessor',

mean=[114.75, 114.75, 114.75],

std=[57.375, 57.375, 57.375],

- blending=dict(

- type='RandomBatchAugment',

- augments=[

- dict(type='MixupBlending', alpha=0.8, num_classes=400),

- dict(type='CutmixBlending', alpha=1, num_classes=400)

- ]),

format_shape='NCTHW'),

cls_head=dict(in_channels=1152),

test_cfg=dict(max_testing_views=5))

@@ -78,13 +72,17 @@

dict(type='PackActionInputs')

]

+repeat_sample = 2

train_dataloader = dict(

batch_size=8,

num_workers=8,

persistent_workers=True,

sampler=dict(type='DefaultSampler', shuffle=True),

+ collate_fn=dict(type='repeat_pseudo_collate'),

dataset=dict(

- type=dataset_type,

+ type='RepeatAugDataset',

+ num_repeats=repeat_sample,

+ sample_once=True,

ann_file=ann_file_train,

data_prefix=dict(video=data_root),

pipeline=train_pipeline))

@@ -119,26 +117,21 @@

val_cfg = dict(type='ValLoop')

test_cfg = dict(type='TestLoop')

+base_lr = 1.6e-3

optim_wrapper = dict(

- type='AmpOptimWrapper',

optimizer=dict(

- type='AdamW', lr=1.6e-3, betas=(0.9, 0.999), weight_decay=0.05))

+ type='AdamW', lr=base_lr, betas=(0.9, 0.999), weight_decay=10e-8),

+ paramwise_cfg=dict(norm_decay_mult=0.0, bias_decay_mult=0.0),

+ clip_grad=dict(max_norm=1, norm_type=2))

param_scheduler = [

- dict(

- type='LinearLR',

- start_factor=0.1,

- by_epoch=True,

- begin=0,

- end=30,

- convert_to_iter_based=True),

dict(

type='CosineAnnealingLR',

- T_max=200,

+ T_max=30,

eta_min=0,

by_epoch=True,

begin=0,

- end=200,

+ end=30,

convert_to_iter_based=True)

]

@@ -149,4 +142,4 @@

# - `enable` means enable scaling LR automatically

# or not by default.

# - `base_batch_size` = (8 GPUs) x (8 samples per GPU).

-auto_scale_lr = dict(enable=True, base_batch_size=512)

+auto_scale_lr = dict(enable=True, base_batch_size=128 // repeat_sample)

diff --git a/configs/recognition/mvit/mvit-large-p244_u40_sthv2-rgb.py b/configs/recognition/mvit/mvit-large-p244_u40_sthv2-rgb.py

index b3fde41a78..ea9d54c068 100644

--- a/configs/recognition/mvit/mvit-large-p244_u40_sthv2-rgb.py

+++ b/configs/recognition/mvit/mvit-large-p244_u40_sthv2-rgb.py

@@ -110,7 +110,6 @@

base_lr = 1.6e-3

optim_wrapper = dict(

- type='AmpOptimWrapper',

optimizer=dict(

type='AdamW', lr=base_lr, betas=(0.9, 0.999), weight_decay=0.05))

diff --git a/configs/recognition/mvit/mvit-small-p244_16x4x1_kinetics400-rgb.py b/configs/recognition/mvit/mvit-small-p244_32xb16-16x4x1-200e_kinetics400-rgb.py

similarity index 91%

rename from configs/recognition/mvit/mvit-small-p244_16x4x1_kinetics400-rgb.py

rename to configs/recognition/mvit/mvit-small-p244_32xb16-16x4x1-200e_kinetics400-rgb.py

index 4da89b5a4a..9f6b1cbd6d 100644

--- a/configs/recognition/mvit/mvit-small-p244_16x4x1_kinetics400-rgb.py

+++ b/configs/recognition/mvit/mvit-small-p244_32xb16-16x4x1-200e_kinetics400-rgb.py

@@ -24,6 +24,7 @@

ann_file_test = 'data/kinetics400/kinetics400_val_list_videos.txt'

file_client_args = dict(io_backend='disk')

+

train_pipeline = [

dict(type='DecordInit', **file_client_args),

dict(type='SampleFrames', clip_len=16, frame_interval=4, num_clips=1),

@@ -70,13 +71,17 @@

dict(type='PackActionInputs')

]

+repeat_sample = 2

train_dataloader = dict(

batch_size=8,

num_workers=8,

persistent_workers=True,

sampler=dict(type='DefaultSampler', shuffle=True),

+ collate_fn=dict(type='repeat_pseudo_collate'),

dataset=dict(

- type=dataset_type,

+ type='RepeatAugDataset',

+ num_repeats=repeat_sample,

+ sample_once=True,

ann_file=ann_file_train,

data_prefix=dict(video=data_root),

pipeline=train_pipeline))

@@ -107,20 +112,21 @@

test_evaluator = val_evaluator

train_cfg = dict(

- type='EpochBasedTrainLoop', max_epochs=200, val_begin=1, val_interval=3)

+ type='EpochBasedTrainLoop', max_epochs=200, val_begin=1, val_interval=1)

val_cfg = dict(type='ValLoop')

test_cfg = dict(type='TestLoop')

base_lr = 1.6e-3

optim_wrapper = dict(

- type='AmpOptimWrapper',

optimizer=dict(

- type='AdamW', lr=base_lr, betas=(0.9, 0.999), weight_decay=0.05))

+ type='AdamW', lr=base_lr, betas=(0.9, 0.999), weight_decay=0.05),

+ paramwise_cfg=dict(norm_decay_mult=0.0, bias_decay_mult=0.0),

+ clip_grad=dict(max_norm=1, norm_type=2))

param_scheduler = [

dict(

type='LinearLR',

- start_factor=0.1,

+ start_factor=0.01,

by_epoch=True,

begin=0,

end=30,

@@ -142,4 +148,4 @@

# - `enable` means enable scaling LR automatically

# or not by default.

# - `base_batch_size` = (8 GPUs) x (8 samples per GPU).

-auto_scale_lr = dict(enable=True, base_batch_size=512)

+auto_scale_lr = dict(enable=True, base_batch_size=512 // repeat_sample)

diff --git a/configs/recognition/mvit/mvit-small-p244_k400-maskfeat-pre_8xb32-16x4x1-100e_kinetics400-rgb.py b/configs/recognition/mvit/mvit-small-p244_k400-maskfeat-pre_8xb32-16x4x1-100e_kinetics400-rgb.py

new file mode 100644

index 0000000000..6fa2a5e654

--- /dev/null

+++ b/configs/recognition/mvit/mvit-small-p244_k400-maskfeat-pre_8xb32-16x4x1-100e_kinetics400-rgb.py

@@ -0,0 +1,158 @@

+_base_ = [

+ '../../_base_/models/mvit_small.py', '../../_base_/default_runtime.py'

+]

+

+model = dict(

+ backbone=dict(

+ drop_path_rate=0.1,

+ dim_mul_in_attention=False,

+ pretrained= # noqa: E251

+ 'https://download.openmmlab.com/mmselfsup/1.x/maskfeat/maskfeat_mvit-small_16xb32-amp-coslr-300e_k400/maskfeat_mvit-small_16xb32-amp-coslr-300e_k400_20230131-87d60b6f.pth', # noqa

+ pretrained_type='maskfeat',

+ ),

+ data_preprocessor=dict(

+ type='ActionDataPreprocessor',

+ mean=[114.75, 114.75, 114.75],

+ std=[57.375, 57.375, 57.375],

+ blending=dict(

+ type='RandomBatchAugment',

+ augments=[

+ dict(type='MixupBlending', alpha=0.8, num_classes=400),

+ dict(type='CutmixBlending', alpha=1, num_classes=400)

+ ]),

+ format_shape='NCTHW'),

+ cls_head=dict(dropout_ratio=0., init_scale=0.001))

+

+# dataset settings

+dataset_type = 'VideoDataset'

+data_root = 'data/kinetics400/videos_train'

+data_root_val = 'data/kinetics400/videos_val'

+ann_file_train = 'data/kinetics400/kinetics400_train_list_videos.txt'

+ann_file_val = 'data/kinetics400/kinetics400_val_list_videos.txt'

+ann_file_test = 'data/kinetics400/kinetics400_val_list_videos.txt'

+

+file_client_args = dict(io_backend='disk')

+train_pipeline = [

+ dict(type='DecordInit', **file_client_args),

+ dict(type='SampleFrames', clip_len=16, frame_interval=4, num_clips=1),

+ dict(type='DecordDecode'),

+ dict(type='Resize', scale=(-1, 256)),

+ dict(type='PytorchVideoWrapper', op='RandAugment', magnitude=7),

+ dict(type='RandomResizedCrop'),

+ dict(type='Resize', scale=(224, 224), keep_ratio=False),

+ dict(type='Flip', flip_ratio=0.5),

+ dict(type='RandomErasing', erase_prob=0.25, mode='rand'),

+ dict(type='FormatShape', input_format='NCTHW'),

+ dict(type='PackActionInputs')

+]

+val_pipeline = [

+ dict(type='DecordInit', **file_client_args),

+ dict(

+ type='SampleFrames',

+ clip_len=16,

+ frame_interval=4,

+ num_clips=1,

+ test_mode=True),

+ dict(type='DecordDecode'),

+ dict(type='Resize', scale=(-1, 256)),

+ dict(type='CenterCrop', crop_size=224),

+ dict(type='FormatShape', input_format='NCTHW'),

+ dict(type='PackActionInputs')

+]

+test_pipeline = [

+ dict(type='DecordInit', **file_client_args),

+ dict(

+ type='SampleFrames',

+ clip_len=16,

+ frame_interval=4,

+ num_clips=10,

+ test_mode=True),

+ dict(type='DecordDecode'),

+ dict(type='Resize', scale=(-1, 224)),

+ dict(type='CenterCrop', crop_size=224),

+ dict(type='FormatShape', input_format='NCTHW'),

+ dict(type='PackActionInputs')

+]

+

+repeat_sample = 2

+train_dataloader = dict(

+ batch_size=16,

+ num_workers=8,

+ persistent_workers=True,

+ sampler=dict(type='DefaultSampler', shuffle=True),

+ collate_fn=dict(type='repeat_pseudo_collate'),

+ dataset=dict(

+ type='RepeatAugDataset',

+ num_repeats=repeat_sample,

+ ann_file=ann_file_train,

+ data_prefix=dict(video=data_root),

+ pipeline=train_pipeline))

+val_dataloader = dict(

+ batch_size=8,

+ num_workers=8,

+ persistent_workers=True,

+ sampler=dict(type='DefaultSampler', shuffle=False),

+ dataset=dict(

+ type=dataset_type,

+ ann_file=ann_file_val,

+ data_prefix=dict(video=data_root_val),

+ pipeline=val_pipeline,

+ test_mode=True))

+test_dataloader = dict(

+ batch_size=1,

+ num_workers=8,

+ persistent_workers=True,

+ sampler=dict(type='DefaultSampler', shuffle=False),

+ dataset=dict(

+ type=dataset_type,

+ ann_file=ann_file_test,

+ data_prefix=dict(video=data_root_val),

+ pipeline=test_pipeline,

+ test_mode=True))

+

+val_evaluator = dict(type='AccMetric')

+test_evaluator = val_evaluator

+

+train_cfg = dict(

+ type='EpochBasedTrainLoop', max_epochs=100, val_begin=1, val_interval=1)

+val_cfg = dict(type='ValLoop')

+test_cfg = dict(type='TestLoop')

+

+base_lr = 9.6e-3 # for batch size 512

+optim_wrapper = dict(

+ optimizer=dict(

+ type='AdamW', lr=base_lr, betas=(0.9, 0.999), weight_decay=0.05),

+ constructor='LearningRateDecayOptimizerConstructor',

+ paramwise_cfg={

+ 'decay_rate': 0.75,

+ 'decay_type': 'layer_wise',

+ 'num_layers': 16

+ },

+ clip_grad=dict(max_norm=5, norm_type=2))

+

+param_scheduler = [

+ dict(

+ type='LinearLR',

+ start_factor=1 / 600,

+ by_epoch=True,

+ begin=0,

+ end=20,

+ convert_to_iter_based=True),

+ dict(

+ type='CosineAnnealingLR',

+ T_max=80,

+ eta_min_ratio=1 / 600,

+ by_epoch=True,

+ begin=20,

+ end=100,

+ convert_to_iter_based=True)

+]

+

+default_hooks = dict(

+ checkpoint=dict(interval=3, max_keep_ckpts=20), logger=dict(interval=100))

+

+# Default setting for scaling LR automatically

+# - `enable` means enable scaling LR automatically

+# or not by default.

+# - `base_batch_size` = (8 GPUs) x (8 samples per GPU).

+auto_scale_lr = dict(enable=True, base_batch_size=512 // repeat_sample)

diff --git a/configs/recognition/mvit/mvit-small-p244_u16_sthv2-rgb.py b/configs/recognition/mvit/mvit-small-p244_k400-pre_16xb16-u16-100e_sthv2-rgb.py

similarity index 91%

rename from configs/recognition/mvit/mvit-small-p244_u16_sthv2-rgb.py

rename to configs/recognition/mvit/mvit-small-p244_k400-pre_16xb16-u16-100e_sthv2-rgb.py

index 08934b9a5e..1b4135b52e 100644

--- a/configs/recognition/mvit/mvit-small-p244_u16_sthv2-rgb.py

+++ b/configs/recognition/mvit/mvit-small-p244_k400-pre_16xb16-u16-100e_sthv2-rgb.py

@@ -2,7 +2,14 @@

'../../_base_/models/mvit_small.py', '../../_base_/default_runtime.py'

]

-model = dict(cls_head=dict(num_classes=174))

+model = dict(

+ backbone=dict(

+ init_cfg=dict(

+ type='Pretrained',

+ checkpoint= # noqa: E251

+ 'https://download.openmmlab.com/mmaction/v1.0/recognition/mvit/converted/mvit-small-p244_16x4x1_kinetics400-rgb_20221021-9ebaaeed.pth', # noqa: E501

+ prefix='backbone.')),

+ cls_head=dict(num_classes=174))

# dataset settings

dataset_type = 'VideoDataset'

@@ -91,7 +98,6 @@

base_lr = 1.6e-3

optim_wrapper = dict(

- type='AmpOptimWrapper',

optimizer=dict(

type='AdamW', lr=base_lr, betas=(0.9, 0.999), weight_decay=0.05),

paramwise_cfg=dict(norm_decay_mult=0.0, bias_decay_mult=0.0))

diff --git a/configs/recognition/omnisource/README.md b/configs/recognition/omnisource/README.md

new file mode 100644

index 0000000000..64acf52c35

--- /dev/null

+++ b/configs/recognition/omnisource/README.md

@@ -0,0 +1,79 @@

+# Omnisource

+

+

+

+

+

+## Abstract

+

+

+

+We propose to train a recognizer that can classify images and videos. The recognizer is jointly trained on image and video datasets. Compared with pre-training on the same image dataset, this method can significantly improve the video recognition performance.

+

+

+

+## Results and Models

+

+### Kinetics-400

+

+| frame sampling strategy | scheduler | resolution | gpus | backbone | joint-training | top1 acc | top5 acc | testing protocol | FLOPs | params | config | ckpt | log |

+| :---------------------: | :-----------: | :--------: | :--: | :------: | :------------: | :------: | :------: | :---------------: | :----: | :----: | :---------------------------: | :-------------------------: | :-------------------------: |

+| 8x8x1 | Linear+Cosine | 224x224 | 8 | ResNet50 | ImageNet | 77.30 | 93.23 | 10 clips x 3 crop | 54.75G | 32.45M | [config](/configs/recognition/omnisource/slowonly_r50_8xb16-8x8x1-256e_imagenet-kinetics400-rgb.py) | [ckpt](https://download.openmmlab.com/mmaction/v1.0/recognition/omnisource/slowonly_r50_8xb16-8x8x1-256e_imagenet-kinetics400-rgb_20230208-61c4be0d.pth) | [log](https://download.openmmlab.com/mmaction/v1.0/recognition/omnisource/slowonly_r50_8xb16-8x8x1-256e_imagenet-kinetics400-rgb.log) |

+

+1. The **gpus** indicates the number of gpus we used to get the checkpoint. If you want to use a different number of gpus or videos per gpu, the best way is to set `--auto-scale-lr` when calling `tools/train.py`, this parameter will auto-scale the learning rate according to the actual batch size and the original batch size.

+2. The validation set of Kinetics400 we used consists of 19796 videos. These videos are available at [Kinetics400-Validation](https://mycuhk-my.sharepoint.com/:u:/g/personal/1155136485_link_cuhk_edu_hk/EbXw2WX94J1Hunyt3MWNDJUBz-nHvQYhO9pvKqm6g39PMA?e=a9QldB). The corresponding [data list](https://download.openmmlab.com/mmaction/dataset/k400_val/kinetics_val_list.txt) (each line is of the format 'video_id, num_frames, label_index') and the [label map](https://download.openmmlab.com/mmaction/dataset/k400_val/kinetics_class2ind.txt) are also available.

+

+For more details on data preparation, you can refer to [Kinetics400](/tools/data/kinetics/README.md).

+

+## Train

+

+You can use the following command to train a model.

+

+```shell

+python tools/train.py ${CONFIG_FILE} [optional arguments]

+```

+

+Example: train SlowOnly model on Kinetics-400 dataset in a deterministic option with periodic validation.

+

+```shell

+python tools/train.py configs/recognition/omnisource/slowonly_r50_8xb16-8x8x1-256e_imagenet-kinetics400-rgb.py \

+ --seed=0 --deterministic

+```

+

+We found that the training of this Omnisource model could crash for unknown reasons. If this happens, you can resume training by adding the `--cfg-options resume=True` to the training script.

+

+For more details, you can refer to the **Training** part in the [Training and Test Tutorial](/docs/en/user_guides/4_train_test.md).

+

+## Test

+

+You can use the following command to test a model.

+

+```shell

+python tools/test.py ${CONFIG_FILE} ${CHECKPOINT_FILE} [optional arguments]

+```

+

+Example: test SlowOnly model on Kinetics-400 dataset and dump the result to a pkl file.

+

+```shell

+python tools/test.py configs/recognition/omnisource/slowonly_r50_8xb16-8x8x1-256e_imagenet-kinetics400-rgb.py \

+ checkpoints/SOME_CHECKPOINT.pth --dump result.pkl

+```

+

+For more details, you can refer to the **Test** part in the [Training and Test Tutorial](/docs/en/user_guides/4_train_test.md).

+

+## Citation

+

+```BibTeX

+@inproceedings{feichtenhofer2019slowfast,

+ title={Slowfast networks for video recognition},

+ author={Feichtenhofer, Christoph and Fan, Haoqi and Malik, Jitendra and He, Kaiming},

+ booktitle={Proceedings of the IEEE international conference on computer vision},

+ pages={6202--6211},

+ year={2019}

+}

+```

diff --git a/configs/recognition/omnisource/metafile.yml b/configs/recognition/omnisource/metafile.yml

new file mode 100644

index 0000000000..af4524e5b0

--- /dev/null

+++ b/configs/recognition/omnisource/metafile.yml

@@ -0,0 +1,28 @@

+Collections:

+ - Name: Omnisource

+ README: configs/recognition/omnisource/README.md

+

+

+Models:

+ - Name: slowonly_r50_8xb16-8x8x1-256e_imagenet-kinetics400-rgb

+ Config: configs/recognition/omnisource/slowonly_r50_8xb16-8x8x1-256e_imagenet-kinetics400-rgb.py

+ In Collection: SlowOnly

+ Metadata:

+ Architecture: ResNet50

+ Batch Size: 16

+ Epochs: 256

+ FLOPs: 54.75G

+ Parameters: 32.45M

+ Pretrained: None

+ Resolution: short-side 320

+ Training Data: Kinetics-400

+ Training Resources: 8 GPUs

+ Modality: RGB

+ Results:

+ - Dataset: Kinetics-400

+ Task: Action Recognition

+ Metrics:

+ Top 1 Accuracy: 77.30

+ Top 5 Accuracy: 93.23

+ Training Log: https://download.openmmlab.com/mmaction/v1.0/recognition/omnisource/slowonly_r50_8xb16-8x8x1-256e_imagenet-kinetics400-rgb.log

+ Weights: https://download.openmmlab.com/mmaction/v1.0/recognition/omnisource/slowonly_r50_8xb16-8x8x1-256e_imagenet-kinetics400-rgb_20230208-61c4be0d.pth

diff --git a/configs/recognition/omnisource/slowonly_r50_16xb16-8x8x1-256e_imagenet-kinetics400-rgb.py b/configs/recognition/omnisource/slowonly_r50_8xb16-8x8x1-256e_imagenet-kinetics400-rgb.py

similarity index 98%

rename from configs/recognition/omnisource/slowonly_r50_16xb16-8x8x1-256e_imagenet-kinetics400-rgb.py

rename to configs/recognition/omnisource/slowonly_r50_8xb16-8x8x1-256e_imagenet-kinetics400-rgb.py

index 05feb2710a..2b28285635 100644

--- a/configs/recognition/omnisource/slowonly_r50_16xb16-8x8x1-256e_imagenet-kinetics400-rgb.py

+++ b/configs/recognition/omnisource/slowonly_r50_8xb16-8x8x1-256e_imagenet-kinetics400-rgb.py

@@ -159,7 +159,7 @@

convert_to_iter_based=True)

]

"""

-The learning rate is for total_batch_size = 16 x 16 (num_gpus x batch_size)

+The learning rate is for total_batch_size = 8 x 16 (num_gpus x batch_size)

If you want to use other batch size or number of GPU settings, please update

the learning rate with the linear scaling rule.

"""

diff --git a/configs/recognition/slowfast/metafile.yml b/configs/recognition/slowfast/metafile.yml

index 94423659d1..7ba12c0e63 100644

--- a/configs/recognition/slowfast/metafile.yml

+++ b/configs/recognition/slowfast/metafile.yml

@@ -30,6 +30,8 @@ Models:

Weights: https://download.openmmlab.com/mmaction/v1.0/recognition/slowfast/slowfast_r50_8xb8-4x16x1-256e_kinetics400-rgb/slowfast_r50_8xb8-4x16x1-256e_kinetics400-rgb_20220901-701b0f6f.pth

- Name: slowfast_r50_8xb8-8x8x1-256e_kinetics400-rgb

+ Alias:

+ - slowfast

Config: configs/recognition/slowfast/slowfast_r50_8xb8-8x8x1-256e_kinetics400-rgb.py

In Collection: SlowFast

Metadata:

diff --git a/configs/recognition/tsn/metafile.yml b/configs/recognition/tsn/metafile.yml

index b4734c93a2..e618ed71cc 100644

--- a/configs/recognition/tsn/metafile.yml

+++ b/configs/recognition/tsn/metafile.yml

@@ -53,6 +53,8 @@ Models:

Weights: https://download.openmmlab.com/mmaction/v1.0/recognition/tsn/tsn_imagenet-pretrained-r50_8xb32-1x1x5-100e_kinetics400-rgb/tsn_imagenet-pretrained-r50_8xb32-1x1x5-100e_kinetics400-rgb_20220906-65d68713.pth

- Name: tsn_imagenet-pretrained-r50_8xb32-1x1x8-100e_kinetics400-rgb

+ Alias:

+ - TSN

Config: configs/recognition/tsn/tsn_imagenet-pretrained-r50_8xb32-1x1x8-100e_kinetics400-rgb.py

In Collection: TSN

Metadata:

diff --git a/configs/recognition/uniformer/README.md b/configs/recognition/uniformer/README.md

new file mode 100644

index 0000000000..65c224ecc3

--- /dev/null

+++ b/configs/recognition/uniformer/README.md

@@ -0,0 +1,67 @@

+# UniFormer

+

+[UniFormer: Unified Transformer for Efficient Spatiotemporal Representation Learning](https://arxiv.org/abs/2201.04676)

+

+

+

+## Abstract

+

+

+

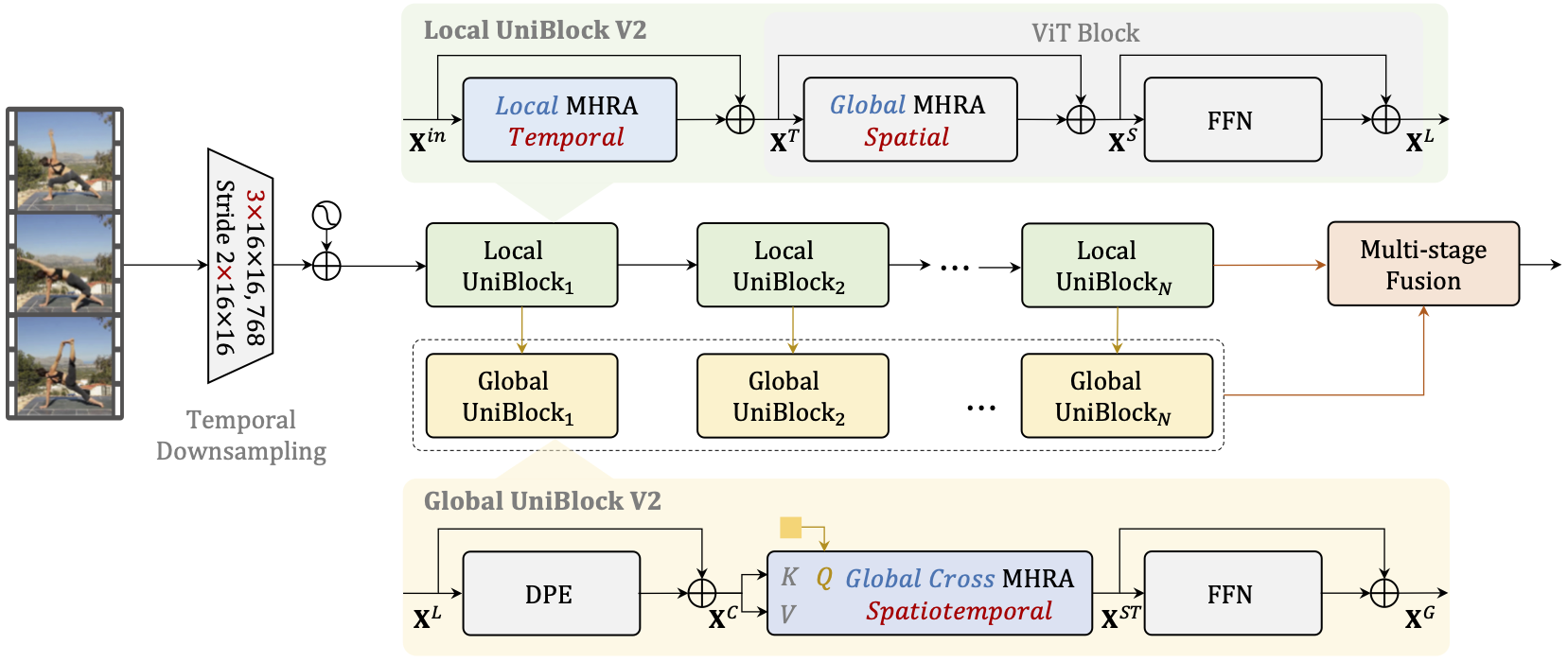

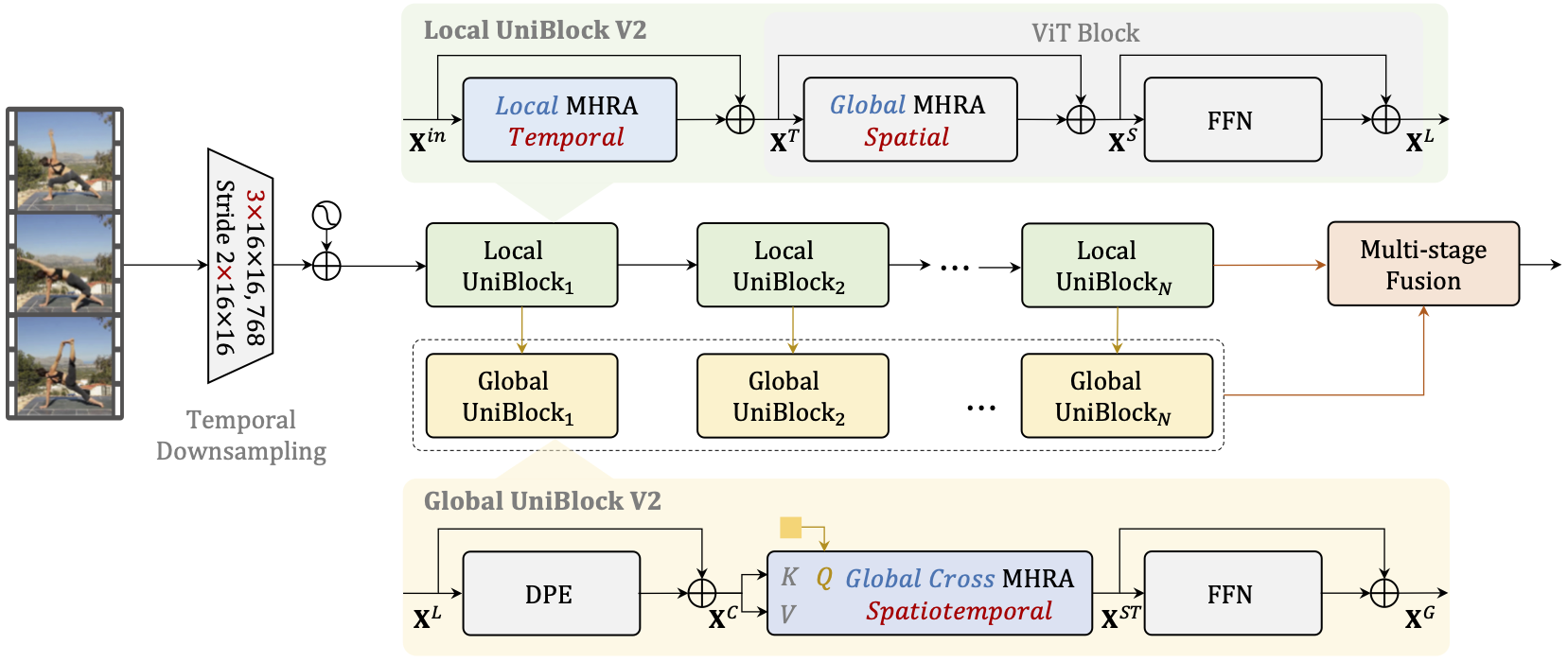

+It is a challenging task to learn rich and multi-scale spatiotemporal semantics from high-dimensional videos, due to large local redundancy and complex global dependency between video frames. The recent advances in this research have been mainly driven by 3D convolutional neural networks and vision transformers. Although 3D convolution can efficiently aggregate local context to suppress local redundancy from a small 3D neighborhood, it lacks the capability to capture global dependency because of the limited receptive field. Alternatively, vision transformers can effectively capture long-range dependency by self-attention mechanism, while having the limitation on reducing local redundancy with blind similarity comparison among all the tokens in each layer. Based on these observations, we propose a novel Unified transFormer (UniFormer) which seamlessly integrates merits of 3D convolution and spatiotemporal self-attention in a concise transformer format, and achieves a preferable balance between computation and accuracy. Different from traditional transformers, our relation aggregator can tackle both spatiotemporal redundancy and dependency, by learning local and global token affinity respectively in shallow and deep layers. We conduct extensive experiments on the popular video benchmarks, e.g., Kinetics-400, Kinetics-600, and Something-Something V1&V2. With only ImageNet-1K pretraining, our UniFormer achieves 82.9%/84.8% top-1 accuracy on Kinetics-400/Kinetics-600, while requiring 10x fewer GFLOPs than other state-of-the-art methods. For Something-Something V1 and V2, our UniFormer achieves new state-of-the-art performances of 60.9% and 71.2% top-1 accuracy respectively.

+

+

+

+

-## Results and models

+## Results and Models

-### Kinetics-400

+1. Models with * in `Inference results` are ported from the repo [SlowFast](https://github.com/facebookresearch/SlowFast/) and tested on our data, and models in `Training results` are trained in MMAction2 on our data.

+2. The values in columns named after `reference` are copied from paper, and `reference*` are results using [SlowFast](https://github.com/facebookresearch/SlowFast/) repo and trained on our data.

+3. The validation set of Kinetics400 we used consists of 19796 videos. These videos are available at [Kinetics400-Validation](https://mycuhk-my.sharepoint.com/:u:/g/personal/1155136485_link_cuhk_edu_hk/EbXw2WX94J1Hunyt3MWNDJUBz-nHvQYhO9pvKqm6g39PMA?e=a9QldB). The corresponding [data list](https://download.openmmlab.com/mmaction/dataset/k400_val/kinetics_val_list.txt) (each line is of the format 'video_id, num_frames, label_index') and the [label map](https://download.openmmlab.com/mmaction/dataset/k400_val/kinetics_class2ind.txt) are also available.

+4. MaskFeat fine-tuning experiment is based on pretrain model from [MMSelfSup](https://github.com/open-mmlab/mmselfsup/tree/dev-1.x/projects/maskfeat_video), and the corresponding reference result is based on pretrain model from [SlowFast](https://github.com/facebookresearch/SlowFast/).

+5. Due to the different versions of Kinetics-400, our training results are different from paper.

+6. Due to the training efficiency, we currently only provide MViT-small training results, we don't ensure other config files' training accuracy and welcome you to contribute your reproduction results.

+7. We use `repeat augment` in MViT training configs following [SlowFast](https://github.com/facebookresearch/SlowFast/). [Repeat augment](https://arxiv.org/pdf/1901.09335.pdf) takes multiple times of data augment for one video, this way can improve the generalization of the model and relieve the IO stress of loading videos. And please note that the actual batch size is `num_repeats` times of `batch_size` in `train_dataloader`.

-| frame sampling strategy | resolution | backbone | pretrain | top1 acc | top5 acc | reference top1 acc | reference top1 acc | testing protocol | FLOPs | params | config | ckpt |

+### Inference results

+

+#### Kinetics-400

+

+| frame sampling strategy | resolution | backbone | pretrain | top1 acc | top5 acc | reference top1 acc | reference top5 acc | testing protocol | FLOPs | params | config | ckpt |

| :---------------------: | :--------: | :--------: | :----------: | :------: | :------: | :------------------------------: | :------------------------------: | :--------------: | :---: | :----: | :------------------: | :----------------: |

-| 16x4x1 | 224x224 | MViTv2-S\* | From scratch | 81.1 | 94.7 | [81.0](https://github.com/facebookresearch/SlowFast/blob/main/projects/mvitv2/README.md) | [94.6](https://github.com/facebookresearch/SlowFast/blob/main/projects/mvitv2/README.md) | 5 clips x 1 crop | 64G | 34.5M | [config](/configs/recognition/mvit/mvit-small-p244_16x4x1_kinetics400-rgb.py) | [ckpt](https://download.openmmlab.com/mmaction/v1.0/recognition/mvit/converted/mvit-small-p244_16x4x1_kinetics400-rgb_20221021-9ebaaeed.pth) |

+| 16x4x1 | 224x224 | MViTv2-S\* | From scratch | 81.1 | 94.7 | [81.0](https://github.com/facebookresearch/SlowFast/blob/main/projects/mvitv2/README.md) | [94.6](https://github.com/facebookresearch/SlowFast/blob/main/projects/mvitv2/README.md) | 5 clips x 1 crop | 64G | 34.5M | [config](/configs/recognition/mvit/mvit-small-p244_32xb16-16x4x1-200e_kinetics400-rgb.py) | [ckpt](https://download.openmmlab.com/mmaction/v1.0/recognition/mvit/converted/mvit-small-p244_16x4x1_kinetics400-rgb_20221021-9ebaaeed.pth) |

| 32x3x1 | 224x224 | MViTv2-B\* | From scratch | 82.6 | 95.8 | [82.9](https://github.com/facebookresearch/SlowFast/blob/main/projects/mvitv2/README.md) | [95.7](https://github.com/facebookresearch/SlowFast/blob/main/projects/mvitv2/README.md) | 5 clips x 1 crop | 225G | 51.2M | [config](/configs/recognition/mvit/mvit-base-p244_32x3x1_kinetics400-rgb.py) | [ckpt](https://download.openmmlab.com/mmaction/v1.0/recognition/mvit/converted/mvit-base-p244_32x3x1_kinetics400-rgb_20221021-f392cd2d.pth) |

| 40x3x1 | 312x312 | MViTv2-L\* | From scratch | 85.4 | 96.2 | [86.1](https://github.com/facebookresearch/SlowFast/blob/main/projects/mvitv2/README.md) | [97.0](https://github.com/facebookresearch/SlowFast/blob/main/projects/mvitv2/README.md) | 5 clips x 3 crop | 2828G | 213M | [config](/configs/recognition/mvit/mvit-large-p244_40x3x1_kinetics400-rgb.py) | [ckpt](https://download.openmmlab.com/mmaction/v1.0/recognition/mvit/converted/mvit-large-p244_40x3x1_kinetics400-rgb_20221021-11fe1f97.pth) |

-### Something-Something V2

+#### Something-Something V2

-| frame sampling strategy | resolution | backbone | pretrain | top1 acc | top5 acc | reference top1 acc | reference top1 acc | testing protocol | FLOPs | params | config | ckpt |

+| frame sampling strategy | resolution | backbone | pretrain | top1 acc | top5 acc | reference top1 acc | reference top5 acc | testing protocol | FLOPs | params | config | ckpt |

| :---------------------: | :--------: | :--------: | :----------: | :------: | :------: | :------------------------------: | :------------------------------: | :--------------: | :---: | :----: | :------------------: | :----------------: |

-| uniform 16 | 224x224 | MViTv2-S\* | K400 | 68.1 | 91.0 | [68.2](https://github.com/facebookresearch/SlowFast/blob/main/projects/mvitv2/README.md) | [91.4](https://github.com/facebookresearch/SlowFast/blob/main/projects/mvitv2/README.md) | 1 clips x 3 crop | 64G | 34.4M | [config](/configs/recognition/mvit/mvit-small-p244_u16_sthv2-rgb.py) | [ckpt](https://download.openmmlab.com/mmaction/v1.0/recognition/mvit/converted/mvit-small-p244_u16_sthv2-rgb_20221021-65ecae7d.pth) |

+| uniform 16 | 224x224 | MViTv2-S\* | K400 | 68.1 | 91.0 | [68.2](https://github.com/facebookresearch/SlowFast/blob/main/projects/mvitv2/README.md) | [91.4](https://github.com/facebookresearch/SlowFast/blob/main/projects/mvitv2/README.md) | 1 clips x 3 crop | 64G | 34.4M | [config](/configs/recognition/mvit/mvit-small-p244_k400-pre_16xb16-u16-100e_sthv2-rgb.py) | [ckpt](https://download.openmmlab.com/mmaction/v1.0/recognition/mvit/converted/mvit-small-p244_u16_sthv2-rgb_20221021-65ecae7d.pth) |

| uniform 32 | 224x224 | MViTv2-B\* | K400 | 70.8 | 92.7 | [70.5](https://github.com/facebookresearch/SlowFast/blob/main/projects/mvitv2/README.md) | [92.7](https://github.com/facebookresearch/SlowFast/blob/main/projects/mvitv2/README.md) | 1 clips x 3 crop | 225G | 51.1M | [config](/configs/recognition/mvit/mvit-base-p244_u32_sthv2-rgb.py) | [ckpt](https://download.openmmlab.com/mmaction/v1.0/recognition/mvit/converted/mvit-base-p244_u32_sthv2-rgb_20221021-d5de5da6.pth) |

| uniform 40 | 312x312 | MViTv2-L\* | IN21K + K400 | 73.2 | 94.0 | [73.3](https://github.com/facebookresearch/SlowFast/blob/main/projects/mvitv2/README.md) | [94.0](https://github.com/facebookresearch/SlowFast/blob/main/projects/mvitv2/README.md) | 1 clips x 3 crop | 2828G | 213M | [config](/configs/recognition/mvit/mvit-large-p244_u40_sthv2-rgb.py) | [ckpt](https://download.openmmlab.com/mmaction/v1.0/recognition/mvit/converted/mvit-large-p244_u40_sthv2-rgb_20221021-61696e07.pth) |

-*Models with * are ported from the repo [SlowFast](https://github.com/facebookresearch/SlowFast/) and tested on our data. Currently, we only support the testing of MViT models, training will be available soon.*

+### Training results

+

+#### Kinetics-400

+

+| frame sampling strategy | resolution | backbone | pretrain | top1 acc | top5 acc | reference\* top1 acc | reference\* top5 acc | testing protocol | FLOPs | params | config | ckpt | log |

+| :---------------------: | :--------: | :------: | :-----------: | :------: | :------: | :---------------------------: | :----------------------------: | :---------------: | :---: | :----: | :--------------: | :------------: | :-----------: |

+| 16x4x1 | 224x224 | MViTv2-S | From scratch | 80.6 | 94.7 | [80.8](https://github.com/facebookresearch/SlowFast/blob/main/projects/mvitv2/README.md) | [94.6](https://github.com/facebookresearch/SlowFast/blob/main/projects/mvitv2/README.md) | 5 clips x 1 crop | 64G | 34.5M | [config](configs/recognition/mvit/mvit-small-p244_32xb16-16x4x1-200e_kinetics400-rgb.py) | [ckpt](https://download.openmmlab.com/mmaction/v1.0/recognition/mvit/mvit-small-p244_32xb16-16x4x1-200e_kinetics400-rgb/mvit-small-p244_32xb16-16x4x1-200e_kinetics400-rgb_20230201-23284ff3.pth) | [log](https://download.openmmlab.com/mmaction/v1.0/recognition/mvit/mvit-small-p244_32xb16-16x4x1-200e_kinetics400-rgb/mvit-small-p244_32xb16-16x4x1-200e_kinetics400-rgb.log) |

+| 16x4x1 | 224x224 | MViTv2-S | K400 MaskFeat | 81.8 | 95.2 | [81.5](https://github.com/facebookresearch/SlowFast/blob/main/projects/maskfeat/README.md) | [94.9](https://github.com/facebookresearch/SlowFast/blob/main/projects/maskfeat/README.md) | 10 clips x 1 crop | 71G | 36.4M | [config](/configs/recognition/mvit/mvit-small-p244_k400-maskfeat-pre_8xb32-16x4x1-100e_kinetics400-rgb.py) | [ckpt](https://download.openmmlab.com/mmaction/v1.0/recognition/mvit/mvit-small-p244_k400-maskfeat-pre_8xb32-16x4x1-100e_kinetics400-rgb/mvit-small-p244_k400-maskfeat-pre_8xb32-16x4x1-100e_kinetics400-rgb_20230201-5bced1d0.pth) | [log](https://download.openmmlab.com/mmaction/v1.0/recognition/mvit/mvit-small-p244_k400-maskfeat-pre_8xb32-16x4x1-100e_kinetics400-rgb/mvit-small-p244_k400-maskfeat-pre_8xb32-16x4x1-100e_kinetics400-rgb.log) |

+

+the corresponding result without repeat augment is as follows:

+

+| frame sampling strategy | resolution | backbone | pretrain | top1 acc | top5 acc | reference\* top1 acc | reference\* top5 acc | testing protocol | FLOPs | params |

+| :---------------------: | :--------: | :------: | :----------: | :------: | :------: | :--------------------------------------------------: | :--------------------------------------------------: | :--------------: | :---: | :----: |

+| 16x4x1 | 224x224 | MViTv2-S | From scratch | 79.4 | 93.9 | [80.8](https://github.com/facebookresearch/SlowFast/blob/main/projects/mvitv2/README.md) | [94.6](https://github.com/facebookresearch/SlowFast/blob/main/projects/mvitv2/README.md) | 5 clips x 1 crop | 64G | 34.5M |

+

+#### Something-Something V2

-1. The values in columns named after "reference" are copied from paper

-2. The validation set of Kinetics400 we used consists of 19796 videos. These videos are available at [Kinetics400-Validation](https://mycuhk-my.sharepoint.com/:u:/g/personal/1155136485_link_cuhk_edu_hk/EbXw2WX94J1Hunyt3MWNDJUBz-nHvQYhO9pvKqm6g39PMA?e=a9QldB). The corresponding [data list](https://download.openmmlab.com/mmaction/dataset/k400_val/kinetics_val_list.txt) (each line is of the format 'video_id, num_frames, label_index') and the [label map](https://download.openmmlab.com/mmaction/dataset/k400_val/kinetics_class2ind.txt) are also available.

+| frame sampling strategy | resolution | backbone | pretrain | top1 acc | top5 acc | reference top1 acc | reference top5 acc | testing protocol | FLOPs | params | config | ckpt | log |

+| :---------------------: | :--------: | :------: | :------: | :------: | :------: | :---------------------------: | :----------------------------: | :--------------: | :---: | :----: | :----------------: | :--------------: | :-------------: |

+| uniform 16 | 224x224 | MViTv2-S | K400 | 68.2 | 91.3 | [68.2](https://github.com/facebookresearch/SlowFast/blob/main/projects/mvitv2/README.md) | [91.4](https://github.com/facebookresearch/SlowFast/blob/main/projects/mvitv2/README.md) | 1 clips x 3 crop | 64G | 34.4M | [config](/configs/recognition/mvit/mvit-small-p244_k400-pre_16xb16-u16-100e_sthv2-rgb) | [ckpt](https://download.openmmlab.com/mmaction/v1.0/recognition/mvit/mvit-small-p244_k400-pre_16xb16-u16-100e_sthv2-rgb/mvit-small-p244_k400-pre_16xb16-u16-100e_sthv2-rgb_20230201-4065c1b9.pth) | [log](https://download.openmmlab.com/mmaction/v1.0/recognition/mvit/mvit-small-p244_k400-pre_16xb16-u16-100e_sthv2-rgb/mvit-small-p244_k400-pre_16xb16-u16-100e_sthv2-rgb.log) |

For more details on data preparation, you can refer to

diff --git a/configs/recognition/mvit/metafile.yml b/configs/recognition/mvit/metafile.yml

index 888fa24732..3170c61bdc 100644

--- a/configs/recognition/mvit/metafile.yml

+++ b/configs/recognition/mvit/metafile.yml

@@ -6,8 +6,8 @@ Collections:

Title: "MViTv2: Improved Multiscale Vision Transformers for Classification and Detection"

Models:

- - Name: mvit-small-p244_16x4x1_kinetics400-rgb

- Config: configs/recognition/mvit/mvit-small-p244_16x4x1_kinetics400-rgb.py

+ - Name: mvit-small-p244_32xb16-16x4x1-200e_kinetics400-rgb_infer

+ Config: configs/recognition/mvit/mvit-small-p244_32xb16-16x4x1-200e_kinetics400-rgb.py

In Collection: MViT

Metadata:

Architecture: MViT-small

@@ -24,6 +24,28 @@ Models:

Top 5 Accuracy: 94.7

Weights: https://download.openmmlab.com/mmaction/v1.0/recognition/mvit/converted/mvit-small-p244_16x4x1_kinetics400-rgb_20221021-9ebaaeed.pth

+ - Name: mvit-small-p244_32xb16-16x4x1-200e_kinetics400-rgb

+ Config: configs/recognition/mvit/mvit-small-p244_32xb16-16x4x1-200e_kinetics400-rgb.py

+ In Collection: MViT

+ Metadata:

+ Architecture: MViT-small

+ Batch Size: 16

+ Epochs: 100

+ FLOPs: 64G

+ Parameters: 34.5M

+ Resolution: 224x224

+ Training Data: Kinetics-400

+ Training Resources: 32 GPUs

+ Modality: RGB

+ Results:

+ - Dataset: Kinetics-400

+ Task: Action Recognition

+ Metrics:

+ Top 1 Accuracy: 80.6

+ Top 5 Accuracy: 94.7

+ Training Log: https://download.openmmlab.com/mmaction/v1.0/recognition/mvit/mvit-small-p244_32xb16-16x4x1-200e_kinetics400-rgb/mvit-small-p244_32xb16-16x4x1-200e_kinetics400-rgb.log

+ Weights: https://download.openmmlab.com/mmaction/v1.0/recognition/mvit/mvit-small-p244_32xb16-16x4x1-200e_kinetics400-rgb/mvit-small-p244_32xb16-16x4x1-200e_kinetics400-rgb_20230201-23284ff3.pth

+

- Name: mvit-base-p244_32x3x1_kinetics400-rgb

Config: configs/recognition/mvit/mvit-base-p244_32x3x1_kinetics400-rgb.py

In Collection: MViT

@@ -60,8 +82,8 @@ Models:

Top 5 Accuracy: 94.7

Weights: https://download.openmmlab.com/mmaction/v1.0/recognition/mvit/converted/mvit-large-p244_40x3x1_kinetics400-rgb_20221021-11fe1f97.pth

- - Name: mvit-small-p244_u16_sthv2-rgb

- Config: configs/recognition/mvit/mvit-small-p244_u16_sthv2-rgb.py

+ - Name: mvit-small-p244_k400-pre_16xb16-u16-100e_sthv2-rgb_infer

+ Config: configs/recognition/mvit/mvit-small-p244_k400-pre_16xb16-u16-100e_sthv2-rgb.py

In Collection: MViT

Metadata:

Architecture: MViT-small

@@ -78,6 +100,29 @@ Models:

Top 5 Accuracy: 91.0

Weights: https://download.openmmlab.com/mmaction/v1.0/recognition/mvit/converted/mvit-small-p244_u16_sthv2-rgb_20221021-65ecae7d.pth

+ - Name: mvit-small-p244_k400-pre_16xb16-u16-100e_sthv2-rgb

+ Config: configs/recognition/mvit/mvit-small-p244_32xb16-16x4x1-200e_kinetics400-rgb.py

+ In Collection: MViT

+ Metadata:

+ Architecture: MViT-small

+ Batch Size: 16

+ Epochs: 100

+ FLOPs: 64G

+ Parameters: 34.4M

+ Pretrained: Kinetics-400

+ Resolution: 224x224

+ Training Data: SthV2

+ Training Resources: 16 GPUs

+ Modality: RGB

+ Results:

+ - Dataset: SthV2

+ Task: Action Recognition

+ Metrics:

+ Top 1 Accuracy: 68.2

+ Top 5 Accuracy: 91.3

+ Training Log: https://download.openmmlab.com/mmaction/v1.0/recognition/mvit/mvit-small-p244_k400-pre_16xb16-u16-100e_sthv2-rgb/mvit-small-p244_k400-pre_16xb16-u16-100e_sthv2-rgb.log

+ Weights: https://download.openmmlab.com/mmaction/v1.0/recognition/mvit/mvit-small-p244_k400-pre_16xb16-u16-100e_sthv2-rgb/mvit-small-p244_k400-pre_16xb16-u16-100e_sthv2-rgb_20230201-4065c1b9.pth

+

- Name: mvit-base-p244_u32_sthv2-rgb

Config: configs/recognition/mvit/mvit-base-p244_u32_sthv2-rgb.py

In Collection: MViT

@@ -113,3 +158,26 @@ Models:

Top 1 Accuracy: 73.2

Top 5 Accuracy: 94.0

Weights: https://download.openmmlab.com/mmaction/v1.0/recognition/mvit/converted/mvit-large-p244_u40_sthv2-rgb_20221021-61696e07.pth

+

+ - Name: mvit-small-p244_k400-maskfeat-pre_8xb32-16x4x1-100e_kinetics400-rgb

+ Config: configs/recognition/mvit/mvit-small-p244_k400-maskfeat-pre_8xb32-16x4x1-100e_kinetics400-rgb.py

+ In Collection: MViT

+ Metadata:

+ Architecture: MViT-small

+ Batch Size: 32

+ Epochs: 100

+ FLOPs: 71G

+ Parameters: 36.4M

+ Pretrained: Kinetics-400 MaskFeat

+ Resolution: 224x224

+ Training Data: Kinetics-400

+ Training Resources: 8 GPUs

+ Modality: RGB

+ Results:

+ - Dataset: Kinetics-400

+ Task: Action Recognition

+ Metrics:

+ Top 1 Accuracy: 81.8

+ Top 5 Accuracy: 95.2

+ Training Log: https://download.openmmlab.com/mmaction/v1.0/recognition/mvit/mvit-small-p244_k400-maskfeat-pre_8xb32-16x4x1-100e_kinetics400-rgb/mvit-small-p244_k400-maskfeat-pre_8xb32-16x4x1-100e_kinetics400-rgb.log

+ Weights: https://download.openmmlab.com/mmaction/v1.0/recognition/mvit/mvit-small-p244_k400-maskfeat-pre_8xb32-16x4x1-100e_kinetics400-rgb/mvit-small-p244_k400-maskfeat-pre_8xb32-16x4x1-100e_kinetics400-rgb_20230201-5bced1d0.pth

diff --git a/configs/recognition/mvit/mvit-base-p244_32x3x1_kinetics400-rgb.py b/configs/recognition/mvit/mvit-base-p244_32x3x1_kinetics400-rgb.py

index b1e186f195..fb552c9329 100644

--- a/configs/recognition/mvit/mvit-base-p244_32x3x1_kinetics400-rgb.py

+++ b/configs/recognition/mvit/mvit-base-p244_32x3x1_kinetics400-rgb.py

@@ -76,13 +76,17 @@

dict(type='PackActionInputs')

]

+repeat_sample = 2

train_dataloader = dict(

batch_size=8,

num_workers=8,

persistent_workers=True,

sampler=dict(type='DefaultSampler', shuffle=True),

+ collate_fn=dict(type='repeat_pseudo_collate'),

dataset=dict(

- type=dataset_type,

+ type='RepeatAugDataset',

+ num_repeats=repeat_sample,

+ sample_once=True,

ann_file=ann_file_train,

data_prefix=dict(video=data_root),

pipeline=train_pipeline))

@@ -113,19 +117,21 @@

test_evaluator = val_evaluator

train_cfg = dict(

- type='EpochBasedTrainLoop', max_epochs=30, val_begin=1, val_interval=3)

+ type='EpochBasedTrainLoop', max_epochs=200, val_begin=1, val_interval=1)

val_cfg = dict(type='ValLoop')

test_cfg = dict(type='TestLoop')

+base_lr = 1.6e-3

optim_wrapper = dict(

- type='AmpOptimWrapper',

optimizer=dict(

- type='AdamW', lr=1.6e-3, betas=(0.9, 0.999), weight_decay=0.05))

+ type='AdamW', lr=base_lr, betas=(0.9, 0.999), weight_decay=0.05),

+ paramwise_cfg=dict(norm_decay_mult=0.0, bias_decay_mult=0.0),

+ clip_grad=dict(max_norm=1, norm_type=2))

param_scheduler = [

dict(

type='LinearLR',

- start_factor=0.1,

+ start_factor=0.01,

by_epoch=True,

begin=0,

end=30,

@@ -133,9 +139,9 @@

dict(

type='CosineAnnealingLR',

T_max=200,

- eta_min=0,

+ eta_min=base_lr / 100,

by_epoch=True,

- begin=0,

+ begin=30,

end=200,

convert_to_iter_based=True)

]

@@ -147,4 +153,4 @@

# - `enable` means enable scaling LR automatically

# or not by default.

# - `base_batch_size` = (8 GPUs) x (8 samples per GPU).

-auto_scale_lr = dict(enable=False, base_batch_size=64)

+auto_scale_lr = dict(enable=False, base_batch_size=512 // repeat_sample)

diff --git a/configs/recognition/mvit/mvit-base-p244_u32_sthv2-rgb.py b/configs/recognition/mvit/mvit-base-p244_u32_sthv2-rgb.py

index c954b60b54..cdbf22dd1f 100644

--- a/configs/recognition/mvit/mvit-base-p244_u32_sthv2-rgb.py

+++ b/configs/recognition/mvit/mvit-base-p244_u32_sthv2-rgb.py

@@ -108,7 +108,6 @@

base_lr = 1.6e-3

optim_wrapper = dict(

- type='AmpOptimWrapper',

optimizer=dict(

type='AdamW', lr=base_lr, betas=(0.9, 0.999), weight_decay=0.05))

diff --git a/configs/recognition/mvit/mvit-large-p244_40x3x1_kinetics400-rgb.py b/configs/recognition/mvit/mvit-large-p244_40x3x1_kinetics400-rgb.py

index 8c93519914..f2d7ef1419 100644

--- a/configs/recognition/mvit/mvit-large-p244_40x3x1_kinetics400-rgb.py

+++ b/configs/recognition/mvit/mvit-large-p244_40x3x1_kinetics400-rgb.py

@@ -13,12 +13,6 @@

type='ActionDataPreprocessor',

mean=[114.75, 114.75, 114.75],

std=[57.375, 57.375, 57.375],

- blending=dict(

- type='RandomBatchAugment',

- augments=[

- dict(type='MixupBlending', alpha=0.8, num_classes=400),

- dict(type='CutmixBlending', alpha=1, num_classes=400)

- ]),

format_shape='NCTHW'),

cls_head=dict(in_channels=1152),

test_cfg=dict(max_testing_views=5))

@@ -78,13 +72,17 @@

dict(type='PackActionInputs')

]

+repeat_sample = 2

train_dataloader = dict(

batch_size=8,

num_workers=8,

persistent_workers=True,

sampler=dict(type='DefaultSampler', shuffle=True),

+ collate_fn=dict(type='repeat_pseudo_collate'),

dataset=dict(

- type=dataset_type,

+ type='RepeatAugDataset',

+ num_repeats=repeat_sample,

+ sample_once=True,

ann_file=ann_file_train,

data_prefix=dict(video=data_root),

pipeline=train_pipeline))

@@ -119,26 +117,21 @@

val_cfg = dict(type='ValLoop')

test_cfg = dict(type='TestLoop')

+base_lr = 1.6e-3

optim_wrapper = dict(

- type='AmpOptimWrapper',

optimizer=dict(

- type='AdamW', lr=1.6e-3, betas=(0.9, 0.999), weight_decay=0.05))

+ type='AdamW', lr=base_lr, betas=(0.9, 0.999), weight_decay=10e-8),

+ paramwise_cfg=dict(norm_decay_mult=0.0, bias_decay_mult=0.0),

+ clip_grad=dict(max_norm=1, norm_type=2))

param_scheduler = [

- dict(

- type='LinearLR',

- start_factor=0.1,

- by_epoch=True,

- begin=0,

- end=30,

- convert_to_iter_based=True),

dict(

type='CosineAnnealingLR',

- T_max=200,

+ T_max=30,

eta_min=0,

by_epoch=True,

begin=0,

- end=200,

+ end=30,

convert_to_iter_based=True)

]

@@ -149,4 +142,4 @@

# - `enable` means enable scaling LR automatically

# or not by default.

# - `base_batch_size` = (8 GPUs) x (8 samples per GPU).

-auto_scale_lr = dict(enable=True, base_batch_size=512)

+auto_scale_lr = dict(enable=True, base_batch_size=128 // repeat_sample)

diff --git a/configs/recognition/mvit/mvit-large-p244_u40_sthv2-rgb.py b/configs/recognition/mvit/mvit-large-p244_u40_sthv2-rgb.py

index b3fde41a78..ea9d54c068 100644

--- a/configs/recognition/mvit/mvit-large-p244_u40_sthv2-rgb.py

+++ b/configs/recognition/mvit/mvit-large-p244_u40_sthv2-rgb.py

@@ -110,7 +110,6 @@

base_lr = 1.6e-3

optim_wrapper = dict(

- type='AmpOptimWrapper',

optimizer=dict(

type='AdamW', lr=base_lr, betas=(0.9, 0.999), weight_decay=0.05))

diff --git a/configs/recognition/mvit/mvit-small-p244_16x4x1_kinetics400-rgb.py b/configs/recognition/mvit/mvit-small-p244_32xb16-16x4x1-200e_kinetics400-rgb.py

similarity index 91%

rename from configs/recognition/mvit/mvit-small-p244_16x4x1_kinetics400-rgb.py

rename to configs/recognition/mvit/mvit-small-p244_32xb16-16x4x1-200e_kinetics400-rgb.py

index 4da89b5a4a..9f6b1cbd6d 100644

--- a/configs/recognition/mvit/mvit-small-p244_16x4x1_kinetics400-rgb.py

+++ b/configs/recognition/mvit/mvit-small-p244_32xb16-16x4x1-200e_kinetics400-rgb.py

@@ -24,6 +24,7 @@

ann_file_test = 'data/kinetics400/kinetics400_val_list_videos.txt'

file_client_args = dict(io_backend='disk')

+

train_pipeline = [

dict(type='DecordInit', **file_client_args),

dict(type='SampleFrames', clip_len=16, frame_interval=4, num_clips=1),

@@ -70,13 +71,17 @@

dict(type='PackActionInputs')

]

+repeat_sample = 2

train_dataloader = dict(

batch_size=8,

num_workers=8,

persistent_workers=True,

sampler=dict(type='DefaultSampler', shuffle=True),

+ collate_fn=dict(type='repeat_pseudo_collate'),

dataset=dict(

- type=dataset_type,

+ type='RepeatAugDataset',

+ num_repeats=repeat_sample,

+ sample_once=True,

ann_file=ann_file_train,

data_prefix=dict(video=data_root),

pipeline=train_pipeline))

@@ -107,20 +112,21 @@

test_evaluator = val_evaluator

train_cfg = dict(

- type='EpochBasedTrainLoop', max_epochs=200, val_begin=1, val_interval=3)

+ type='EpochBasedTrainLoop', max_epochs=200, val_begin=1, val_interval=1)

val_cfg = dict(type='ValLoop')

test_cfg = dict(type='TestLoop')

base_lr = 1.6e-3

optim_wrapper = dict(

- type='AmpOptimWrapper',

optimizer=dict(

- type='AdamW', lr=base_lr, betas=(0.9, 0.999), weight_decay=0.05))

+ type='AdamW', lr=base_lr, betas=(0.9, 0.999), weight_decay=0.05),

+ paramwise_cfg=dict(norm_decay_mult=0.0, bias_decay_mult=0.0),

+ clip_grad=dict(max_norm=1, norm_type=2))

param_scheduler = [

dict(

type='LinearLR',

- start_factor=0.1,

+ start_factor=0.01,

by_epoch=True,

begin=0,

end=30,

@@ -142,4 +148,4 @@

# - `enable` means enable scaling LR automatically

# or not by default.

# - `base_batch_size` = (8 GPUs) x (8 samples per GPU).

-auto_scale_lr = dict(enable=True, base_batch_size=512)

+auto_scale_lr = dict(enable=True, base_batch_size=512 // repeat_sample)

diff --git a/configs/recognition/mvit/mvit-small-p244_k400-maskfeat-pre_8xb32-16x4x1-100e_kinetics400-rgb.py b/configs/recognition/mvit/mvit-small-p244_k400-maskfeat-pre_8xb32-16x4x1-100e_kinetics400-rgb.py

new file mode 100644

index 0000000000..6fa2a5e654

--- /dev/null

+++ b/configs/recognition/mvit/mvit-small-p244_k400-maskfeat-pre_8xb32-16x4x1-100e_kinetics400-rgb.py

@@ -0,0 +1,158 @@

+_base_ = [

+ '../../_base_/models/mvit_small.py', '../../_base_/default_runtime.py'

+]

+

+model = dict(

+ backbone=dict(

+ drop_path_rate=0.1,

+ dim_mul_in_attention=False,

+ pretrained= # noqa: E251

+ 'https://download.openmmlab.com/mmselfsup/1.x/maskfeat/maskfeat_mvit-small_16xb32-amp-coslr-300e_k400/maskfeat_mvit-small_16xb32-amp-coslr-300e_k400_20230131-87d60b6f.pth', # noqa

+ pretrained_type='maskfeat',

+ ),

+ data_preprocessor=dict(

+ type='ActionDataPreprocessor',

+ mean=[114.75, 114.75, 114.75],

+ std=[57.375, 57.375, 57.375],

+ blending=dict(

+ type='RandomBatchAugment',

+ augments=[

+ dict(type='MixupBlending', alpha=0.8, num_classes=400),

+ dict(type='CutmixBlending', alpha=1, num_classes=400)

+ ]),

+ format_shape='NCTHW'),

+ cls_head=dict(dropout_ratio=0., init_scale=0.001))

+

+# dataset settings

+dataset_type = 'VideoDataset'

+data_root = 'data/kinetics400/videos_train'

+data_root_val = 'data/kinetics400/videos_val'

+ann_file_train = 'data/kinetics400/kinetics400_train_list_videos.txt'

+ann_file_val = 'data/kinetics400/kinetics400_val_list_videos.txt'

+ann_file_test = 'data/kinetics400/kinetics400_val_list_videos.txt'

+

+file_client_args = dict(io_backend='disk')

+train_pipeline = [

+ dict(type='DecordInit', **file_client_args),

+ dict(type='SampleFrames', clip_len=16, frame_interval=4, num_clips=1),

+ dict(type='DecordDecode'),

+ dict(type='Resize', scale=(-1, 256)),

+ dict(type='PytorchVideoWrapper', op='RandAugment', magnitude=7),

+ dict(type='RandomResizedCrop'),

+ dict(type='Resize', scale=(224, 224), keep_ratio=False),

+ dict(type='Flip', flip_ratio=0.5),

+ dict(type='RandomErasing', erase_prob=0.25, mode='rand'),

+ dict(type='FormatShape', input_format='NCTHW'),

+ dict(type='PackActionInputs')

+]

+val_pipeline = [

+ dict(type='DecordInit', **file_client_args),

+ dict(

+ type='SampleFrames',

+ clip_len=16,

+ frame_interval=4,

+ num_clips=1,

+ test_mode=True),

+ dict(type='DecordDecode'),

+ dict(type='Resize', scale=(-1, 256)),

+ dict(type='CenterCrop', crop_size=224),

+ dict(type='FormatShape', input_format='NCTHW'),

+ dict(type='PackActionInputs')

+]

+test_pipeline = [

+ dict(type='DecordInit', **file_client_args),

+ dict(

+ type='SampleFrames',

+ clip_len=16,

+ frame_interval=4,

+ num_clips=10,

+ test_mode=True),

+ dict(type='DecordDecode'),

+ dict(type='Resize', scale=(-1, 224)),

+ dict(type='CenterCrop', crop_size=224),

+ dict(type='FormatShape', input_format='NCTHW'),

+ dict(type='PackActionInputs')

+]

+

+repeat_sample = 2

+train_dataloader = dict(

+ batch_size=16,

+ num_workers=8,

+ persistent_workers=True,

+ sampler=dict(type='DefaultSampler', shuffle=True),

+ collate_fn=dict(type='repeat_pseudo_collate'),

+ dataset=dict(

+ type='RepeatAugDataset',

+ num_repeats=repeat_sample,

+ ann_file=ann_file_train,

+ data_prefix=dict(video=data_root),

+ pipeline=train_pipeline))

+val_dataloader = dict(

+ batch_size=8,

+ num_workers=8,

+ persistent_workers=True,

+ sampler=dict(type='DefaultSampler', shuffle=False),

+ dataset=dict(

+ type=dataset_type,

+ ann_file=ann_file_val,

+ data_prefix=dict(video=data_root_val),

+ pipeline=val_pipeline,

+ test_mode=True))

+test_dataloader = dict(

+ batch_size=1,

+ num_workers=8,

+ persistent_workers=True,

+ sampler=dict(type='DefaultSampler', shuffle=False),

+ dataset=dict(

+ type=dataset_type,

+ ann_file=ann_file_test,

+ data_prefix=dict(video=data_root_val),

+ pipeline=test_pipeline,

+ test_mode=True))

+

+val_evaluator = dict(type='AccMetric')

+test_evaluator = val_evaluator

+

+train_cfg = dict(

+ type='EpochBasedTrainLoop', max_epochs=100, val_begin=1, val_interval=1)

+val_cfg = dict(type='ValLoop')

+test_cfg = dict(type='TestLoop')

+

+base_lr = 9.6e-3 # for batch size 512

+optim_wrapper = dict(

+ optimizer=dict(

+ type='AdamW', lr=base_lr, betas=(0.9, 0.999), weight_decay=0.05),

+ constructor='LearningRateDecayOptimizerConstructor',

+ paramwise_cfg={

+ 'decay_rate': 0.75,

+ 'decay_type': 'layer_wise',

+ 'num_layers': 16

+ },

+ clip_grad=dict(max_norm=5, norm_type=2))

+

+param_scheduler = [

+ dict(

+ type='LinearLR',

+ start_factor=1 / 600,

+ by_epoch=True,

+ begin=0,

+ end=20,

+ convert_to_iter_based=True),

+ dict(

+ type='CosineAnnealingLR',

+ T_max=80,

+ eta_min_ratio=1 / 600,

+ by_epoch=True,

+ begin=20,

+ end=100,

+ convert_to_iter_based=True)

+]

+

+default_hooks = dict(

+ checkpoint=dict(interval=3, max_keep_ckpts=20), logger=dict(interval=100))

+

+# Default setting for scaling LR automatically

+# - `enable` means enable scaling LR automatically

+# or not by default.

+# - `base_batch_size` = (8 GPUs) x (8 samples per GPU).

+auto_scale_lr = dict(enable=True, base_batch_size=512 // repeat_sample)

diff --git a/configs/recognition/mvit/mvit-small-p244_u16_sthv2-rgb.py b/configs/recognition/mvit/mvit-small-p244_k400-pre_16xb16-u16-100e_sthv2-rgb.py

similarity index 91%

rename from configs/recognition/mvit/mvit-small-p244_u16_sthv2-rgb.py

rename to configs/recognition/mvit/mvit-small-p244_k400-pre_16xb16-u16-100e_sthv2-rgb.py

index 08934b9a5e..1b4135b52e 100644

--- a/configs/recognition/mvit/mvit-small-p244_u16_sthv2-rgb.py

+++ b/configs/recognition/mvit/mvit-small-p244_k400-pre_16xb16-u16-100e_sthv2-rgb.py

@@ -2,7 +2,14 @@

'../../_base_/models/mvit_small.py', '../../_base_/default_runtime.py'

]

-model = dict(cls_head=dict(num_classes=174))

+model = dict(

+ backbone=dict(

+ init_cfg=dict(

+ type='Pretrained',

+ checkpoint= # noqa: E251

+ 'https://download.openmmlab.com/mmaction/v1.0/recognition/mvit/converted/mvit-small-p244_16x4x1_kinetics400-rgb_20221021-9ebaaeed.pth', # noqa: E501

+ prefix='backbone.')),

+ cls_head=dict(num_classes=174))

# dataset settings

dataset_type = 'VideoDataset'

@@ -91,7 +98,6 @@

base_lr = 1.6e-3

optim_wrapper = dict(

- type='AmpOptimWrapper',

optimizer=dict(

type='AdamW', lr=base_lr, betas=(0.9, 0.999), weight_decay=0.05),

paramwise_cfg=dict(norm_decay_mult=0.0, bias_decay_mult=0.0))

diff --git a/configs/recognition/omnisource/README.md b/configs/recognition/omnisource/README.md

new file mode 100644

index 0000000000..64acf52c35

--- /dev/null

+++ b/configs/recognition/omnisource/README.md

@@ -0,0 +1,79 @@

+# Omnisource

+

+

+

+

+

+## Abstract

+

+

+

+We propose to train a recognizer that can classify images and videos. The recognizer is jointly trained on image and video datasets. Compared with pre-training on the same image dataset, this method can significantly improve the video recognition performance.

+

+

+

+## Results and Models

+

+### Kinetics-400

+

+| frame sampling strategy | scheduler | resolution | gpus | backbone | joint-training | top1 acc | top5 acc | testing protocol | FLOPs | params | config | ckpt | log |